
Markov chain Monte Carlo
Machine Learning Summer School 2009
http://mlg.eng.cam.ac.uk/mlss09/

Iain Murray
http://www.cs.toronto.edu/~murray/

A statistical problem

What is the average height of the MLSS lecturers?
Method: measure their heights, add them up and divide by N = 20.

What is the average height f of people p in Cambridge C?

$$E_{p\in C}[f(p)] \equiv \frac{1}{|C|}\sum_{p\in C} f(p), \quad \text{"intractable"?}$$

$$\approx \frac{1}{S}\sum_{s=1}^{S} f\big(p^{(s)}\big), \quad \text{for random survey of } S \text{ people } \{p^{(s)}\} \in C$$

Surveying works for large and notionally infinite populations.

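Simple Monte Carlo

Statistical sampling can be applied to any expectation.

In general:

$$\int f(x)P(x)\,dx \approx \frac{1}{S}\sum_{s=1}^{S} f(x^{(s)}), \quad x^{(s)} \sim P(x)$$

Example: making predictions

$$p(x|D) = \int P(x|\theta,D)\,P(\theta|D)\,d\theta \approx \frac{1}{S}\sum_{s=1}^{S} P(x|\theta^{(s)},D), \quad \theta^{(s)} \sim P(\theta|D)$$

More examples: E-step statistics in EM, Boltzmann machine learning.

Properties of Monte Carlo

Estimator:

$$\int f(x)P(x)\,dx \approx \hat{f} \equiv \frac{1}{S}\sum_{s=1}^{S} f(x^{(s)}), \quad x^{(s)} \sim P(x)$$

Estimator is unbiased:

$$E_{P(\{x^{(s)}\})}\big[\hat{f}\big] = \frac{1}{S}\sum_{s=1}^{S} E_{P(x)}[f(x)] = E_{P(x)}[f(x)]$$

Variance shrinks ∝ 1/S:

$$\mathrm{var}_{P(\{x^{(s)}\})}\big[\hat{f}\big] = \frac{1}{S^2}\sum_{s=1}^{S} \mathrm{var}_{P(x)}[f(x)] = \mathrm{var}_{P(x)}[f(x)]/S$$

"Error bars" shrink like $1/\sqrt{S}$.

A dumb approximation of π

Take P(x, y) = 1 for 0 < x < 1 and 0 < y < 1, and 0 otherwise. The fraction of uniform points in the unit square that fall inside the quarter circle $x^2 + y^2 < 1$ is π/4:

    S=12; a=rand(S,2); 4*mean(sum(a.*a,2)<1)
    S=1e7; a=rand(S,2); 4*mean(sum(a.*a,2)<1)

Compare with deterministic quadrature in one dimension:

    4*quadl(@(x) sqrt(1-x.^2), 0, 1, tolerance)

Gives π to 6 dp's in 108 evaluations, machine precision in 2598.
(NB Matlab's quadl fails at zero tolerance.)

Other lecturers are covering alternatives for higher dimensions.
No approximate integration method always works. Sometimes Monte Carlo is the best.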
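The unbiasedness and 1/S variance properties above can be checked numerically. The following is a minimal Python sketch (standard library only), not code from the lecture: the names `simple_monte_carlo`, `inside_quarter_circle` and `uniform_square` are made up for illustration, and the error bar is the usual sample standard deviation divided by $\sqrt{S}$.

```python
import math
import random

def simple_monte_carlo(f, sampler, num_samples, rng):
    """Return (estimate, error_bar) for E[f(x)], with x drawn by sampler(rng)."""
    values = [f(sampler(rng)) for _ in range(num_samples)]
    mean = sum(values) / num_samples
    # Sample variance of f(x); the variance of the average is this divided by S.
    var = sum((v - mean) ** 2 for v in values) / (num_samples - 1)
    return mean, math.sqrt(var / num_samples)

def inside_quarter_circle(point):
    # 4 * indicator(x^2 + y^2 < 1): its expectation under the uniform square is pi.
    x, y = point
    return 4.0 if x * x + y * y < 1.0 else 0.0

def uniform_square(rng):
    return rng.random(), rng.random()

rng = random.Random(0)
for S in (100, 10_000, 1_000_000):
    est, err = simple_monte_carlo(inside_quarter_circle, uniform_square, S, rng)
    print(f"S={S:>8d}  estimate={est:.5f}  error bar={err:.5f}")
```

Each hundredfold increase in S should shrink the reported error bar by roughly a factor of ten.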

Eye-balling samples

Sometimes samples are pleasing to look at (if you're into geometrical combinatorics).
[Figure by Propp and Wilson. Source: MacKay textbook.]

Sanity check probabilistic modelling assumptions:
[Figure panels: Data samples, MoB samples, RBM samples]

Monte Carlo and Insomnia

Enrico Fermi (1901–1954) took great delight in astonishing his colleagues with his remarkably accurate predictions of experimental results. . . . he revealed that his "guesses" were really derived from the statistical sampling techniques that he used to calculate with whenever insomnia struck in the wee morning hours!
– The beginning of the Monte Carlo method, N. Metropolis

Sampling from a Bayes net

Ancestral pass for directed graphical models:
– sample each top level variable from its marginal
– sample each other node from its conditional once its parents have been sampled

Sample:
$A \sim P(A)$
$B \sim P(B)$
$C \sim P(C|A,B)$
$D \sim P(D|B,C)$
$E \sim P(E|C,D)$

$$P(A,B,C,D,E) = P(A)\,P(B)\,P(C|A,B)\,P(D|B,C)\,P(E|C,D)$$

Sampling the conditionals

Use library routines for univariate distributions (and some other special cases).
This book (free online) explains how some of them work: http://cg.scs.carleton.ca/~luc/rnbookindex.html

Sampling from distributions

Draw points uniformly under the curve:
probability mass to left of point ~ Uniform[0,1]

Sampling from distributions

How to convert samples from a Uniform[0,1] generator:
[Figure from PRML, Bishop (2006)]

$$h(y) = \int_{-\infty}^{y} p(y')\,dy'$$

Draw mass to left of point: $u \sim \text{Uniform}[0,1]$
Sample, $y(u) = h^{-1}(u)$

Although we can't always compute and invert h(y).

Rejection sampling

Sampling underneath a $\tilde{P}(x) \propto P(x)$ curve is also valid.

Draw underneath a simple curve $k\tilde{Q}(x) \ge \tilde{P}(x)$:
– Draw $x \sim Q(x)$
– height $u \sim \text{Uniform}[0, k\tilde{Q}(x)]$

Discard the point if above $\tilde{P}$, i.e. if $u > \tilde{P}(x)$.

Importance sampling

Computing $\tilde{P}(x)$ and $\tilde{Q}(x)$, then throwing x away seems wasteful.
Instead rewrite the integral as an expectation under Q:

$$\int f(x)P(x)\,dx = \int f(x)\frac{P(x)}{Q(x)}Q(x)\,dx, \quad (Q(x) > 0 \text{ if } P(x) > 0)$$

$$\approx \frac{1}{S}\sum_{s=1}^{S} f(x^{(s)})\frac{P(x^{(s)})}{Q(x^{(s)})}, \quad x^{(s)} \sim Q(x)$$

This is just simple Monte Carlo again, so it is unbiased.

Importance sampling applies when the integral is not an expectation.
Divide and multiply any integrand by a convenient distribution.
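The importance sampling estimator described above can be sketched in a few lines of Python. This is only an illustration under assumed choices that are not from the lecture: the target P is a standard normal, the proposal Q is a normal with standard deviation 2, and f(x) = x², so the true answer is 1. The helper names are hypothetical.

```python
import math
import random

def normal_pdf(x, mean, std):
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))

def importance_sampling(f, p_pdf, q_pdf, q_sampler, num_samples, rng):
    """Average f(x) * P(x) / Q(x) over samples x drawn from Q."""
    total = 0.0
    for _ in range(num_samples):
        x = q_sampler(rng)
        total += f(x) * p_pdf(x) / q_pdf(x)
    return total / num_samples

rng = random.Random(0)
estimate = importance_sampling(
    f=lambda x: x * x,
    p_pdf=lambda x: normal_pdf(x, 0.0, 1.0),   # assumed target P = N(0, 1)
    q_pdf=lambda x: normal_pdf(x, 0.0, 2.0),   # assumed proposal Q = N(0, 2^2)
    q_sampler=lambda r: r.gauss(0.0, 2.0),
    num_samples=50_000,
    rng=rng,
)
print(estimate)  # should be close to E_P[x^2] = 1
```

The proposal here is deliberately broader than the target; a narrower proposal would give weights with much larger variance.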

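Importance sampling (2)

Previous slide assumed we could evaluate $P(x) = \tilde{P}(x)/Z_P$. Writing $\tilde{r}^{(s)} = \tilde{P}(x^{(s)})/\tilde{Q}(x^{(s)})$:

$$\int f(x)P(x)\,dx \approx \frac{Z_Q}{Z_P}\frac{1}{S}\sum_{s=1}^{S} f(x^{(s)})\,\tilde{r}^{(s)}, \quad x^{(s)} \sim Q(x)$$

$$\approx \frac{\frac{1}{S}\sum_{s=1}^{S} f(x^{(s)})\,\tilde{r}^{(s)}}{\frac{1}{S}\sum_{s'} \tilde{r}^{(s')}} \equiv \sum_{s=1}^{S} f(x^{(s)})\,w^{(s)}$$

This estimator is consistent but biased.

Exercise: Prove that $Z_P/Z_Q \approx \frac{1}{S}\sum_s \tilde{r}^{(s)}$.

Summary so far

– Sums and integrals, often expectations, occur frequently in statistics
– Monte Carlo approximates expectations with a sample average
– Rejection sampling draws samples from complex distributions
– Importance sampling applies Monte Carlo to 'any' sum/integral

Application to large problems

We often can't decompose P(X) into low-dimensional conditionals.

Undirected graphical models: $P(x) = \frac{1}{Z}\prod_i f_i(x)$

Posterior of a directed graphical model:

$$P(A,B,C,D\,|\,E) = \frac{P(A,B,C,D,E)}{P(E)}$$

We often don't know Z or P(E).

Application to large problems

Rejection & importance sampling scale badly with dimensionality.

Example: $P(x) = N(0, I)$, $Q(x) = N(0, \sigma^2 I)$

Rejection sampling:
Requires σ ≥ 1. Fraction of proposals accepted = $\sigma^{-D}$

Importance sampling:
Variance of importance weights = $\left(\frac{\sigma^2}{2 - 1/\sigma^2}\right)^{D/2} - 1$
Infinite / undefined variance if $\sigma \le 1/\sqrt{2}$

Importance sampling weights

[Figure: example samples with their importance weights]
w = 0.00548, w = 1.59e-08, w = 9.65e-06, w = 0.371, w = 0.103, w = 1.01e-08, w = 0.111, w = 1.92e-09, w = 0.0126, w = 1.1e-51

Metropolis algorithm

– Perturb parameters: $Q(\theta'; \theta)$, e.g. $N(\theta, \sigma^2)$
– Accept with probability $\min\left(1, \frac{\tilde{P}(\theta'|D)}{\tilde{P}(\theta|D)}\right)$
– Otherwise keep old parameters

[This subfigure from PRML, Bishop (2006)]

Detail: Metropolis, as stated, requires $Q(\theta'; \theta) = Q(\theta; \theta')$.

Markov chain Monte Carlo

Construct a biased random walk that explores target dist P(x).
Markov steps, $x_t \sim T(x_t \leftarrow x_{t-1})$
MCMC gives approximate, correlated samples from P(x).

Transition operators

Discrete example:

$$P = \begin{pmatrix} 3/5 \\ 1/5 \\ 1/5 \end{pmatrix}, \quad T = \begin{pmatrix} 2/3 & 1/2 & 1/2 \\ 1/6 & 0 & 1/2 \\ 1/6 & 1/2 & 0 \end{pmatrix}, \quad T_{ij} = T(x_i \leftarrow x_j)$$

P is an invariant distribution of T because TP = P, i.e.

$$\sum_{x} T(x' \leftarrow x)P(x) = P(x')$$

Also P is the equilibrium distribution of T. To machine precision:

$$T^{100}\begin{pmatrix} 1 \\ 0 \\ 0 \end{pmatrix} = \begin{pmatrix} 3/5 \\ 1/5 \\ 1/5 \end{pmatrix} = P$$

Ergodicity requires: $T^K(x' \leftarrow x) > 0$ for all $x' : P(x') > 0$, for some K.

Detailed Balance

Detailed balance means the transitions $x \to x'$ and $x' \to x$ are equally probable:

$$T(x' \leftarrow x)P(x) = T(x \leftarrow x')P(x')$$

Detailed balance implies the invariant condition, because the remaining sum is 1:

$$\sum_{x} T(x' \leftarrow x)P(x) = P(x')\sum_{x} T(x \leftarrow x') = P(x')$$

Enforcing detailed balance is easy: it only involves isolated pairs.

Reverse operators

If T satisfies stationarity, we can define a reverse operator

$$\tilde{T}(x \leftarrow x') \propto T(x' \leftarrow x)P(x) = \frac{T(x' \leftarrow x)P(x)}{\sum_{x} T(x' \leftarrow x)P(x)} = \frac{T(x' \leftarrow x)P(x)}{P(x')}$$

Generalized balance condition:

$$T(x' \leftarrow x)P(x) = \tilde{T}(x \leftarrow x')P(x')$$

also implies the invariant condition and is necessary.

Operators satisfying detailed balance are their own reverse operator.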
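The invariance, detailed balance and equilibrium claims for the three-state example can be checked directly. The Python sketch below uses exact rational arithmetic for the first two checks and floating point for the repeated application of T; the function names are illustrative and not part of the lecture.

```python
from fractions import Fraction as F

P = [F(3, 5), F(1, 5), F(1, 5)]
# T[i][j] = T(x_i <- x_j): probability of moving to state i from state j.
T = [
    [F(2, 3), F(1, 2), F(1, 2)],
    [F(1, 6), F(0), F(1, 2)],
    [F(1, 6), F(1, 2), F(0)],
]

def apply_operator(T, p):
    """One Markov step applied to a distribution: returns the vector T p."""
    n = len(p)
    return [sum(T[i][j] * p[j] for j in range(n)) for i in range(n)]

def is_invariant(T, P):
    return apply_operator(T, P) == P

def satisfies_detailed_balance(T, P):
    n = len(P)
    return all(T[i][j] * P[j] == T[j][i] * P[i]
               for i in range(n) for j in range(n))

def iterate(T, p, steps):
    for _ in range(steps):
        p = apply_operator(T, p)
    return p

print(is_invariant(T, P))                # invariance: T P == P
print(satisfies_detailed_balance(T, P))  # pairwise balance for every (i, j)

# Equilibrium: start with all mass on the first state and apply T 100 times.
T_float = [[float(t) for t in row] for row in T]
print(iterate(T_float, [1.0, 0.0, 0.0], 100))
```

Detailed balance is checked pair by pair, which mirrors the remark that it only involves isolated pairs of states.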

Metropolis–Hastings

Transition operator
– Propose a move from the current state $Q(x'; x)$, e.g. $N(x, \sigma^2)$
– Accept with probability $\min\left(1, \frac{P(x')Q(x; x')}{P(x)Q(x'; x)}\right)$
– Otherwise next state in chain is a copy of current state

Notes
– Can use $\tilde{P} \propto P(x)$; normalizer cancels in acceptance ratio
– Satisfies detailed balance (shown below)
– Q must be chosen to fulfill the other technical requirements

$$P(x) \cdot T(x' \leftarrow x) = P(x) \cdot Q(x'; x)\min\left(1, \frac{P(x')Q(x; x')}{P(x)Q(x'; x)}\right)$$

$$= \min\big(P(x)Q(x'; x),\; P(x')Q(x; x')\big)$$

$$= P(x') \cdot Q(x; x')\min\left(1, \frac{P(x)Q(x'; x)}{P(x')Q(x; x')}\right) = P(x') \cdot T(x \leftarrow x')$$

Matlab/Octave code for demo

    function samples = dumb_metropolis(init, log_ptilde, iters, sigma)

    D = numel(init);
    samples = zeros(D, iters);

    state = init;
    Lp_state = log_ptilde(state);
    for ss = 1:iters
        % Propose
        prop = state + sigma*randn(size(state));
        Lp_prop = log_ptilde(prop);
        if log(rand) < (Lp_prop - Lp_state)
            % Accept
            state = prop;
            Lp_state = Lp_prop;
        end
        samples(:, ss) = state(:);
    end

Step-size demo

Explore N(0, 1) with different step sizes σ

    sigma = @(s) plot(dumb_metropolis(0, @(x) -0.5*x*x, 1e3, s));

    sigma(0.1)    % 99.8% accepts
    sigma(1)      % 68.4% accepts
    sigma(100)    % 0.5% accepts

Metropolis limitations

Generic proposals use $Q(x'; x) = N(x, \sigma^2)$
– σ large → many rejections
– σ small → slow diffusion: $\sim (L/\sigma)^2$ iterations required

Combining operators

A sequence of operators, each with P invariant:

$x_0 \sim P(x)$
$x_1 \sim T_a(x_1 \leftarrow x_0)$
$x_2 \sim T_b(x_2 \leftarrow x_1)$
$x_3 \sim T_c(x_3 \leftarrow x_2)$
···

Because each operator leaves P invariant, every state in the sequence is again distributed as P:

$$\sum_{x_0} T_a(x_1 \leftarrow x_0)P(x_0) = P(x_1)$$
$$\sum_{x_1} T_b(x_2 \leftarrow x_1)P(x_1) = P(x_2)$$
$$\sum_{x_2} T_c(x_3 \leftarrow x_2)P(x_2) = P(x_3)$$
···

– Combination $T_c T_b T_a$ leaves P invariant
– If they can reach any x, $T_c T_b T_a$ is a valid MCMC operator
– Individually $T_c$, $T_b$ and $T_a$ need not be ergodic

Gibbs sampling

A method with no rejections:
– Initialize x to some value
– Pick each variable in turn or randomly and resample $P(x_i | x_{j \ne i})$

[Figure from PRML, Bishop (2006)]

Proof of validity:
a) check detailed balance for component update.
b) Metropolis–Hastings 'proposals' $P(x_i | x_{j \ne i})$ ⇒ accept with prob. 1

Apply a series of these operators. Don't need to check acceptance.
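A Gibbs sweep as described above can be sketched in Python for a toy model. Everything specific here is an assumption for illustration: the two binary variables, the unnormalized table `joint`, and the function names. Each conditional is normalized by summing over the two settings of the variable being updated.

```python
import random

# Unnormalized joint over two binary variables, keyed by (x0, x1).
# The numbers are made up for illustration.
joint = {(0, 0): 4.0, (0, 1): 1.0, (1, 0): 2.0, (1, 1): 3.0}

def conditional_prob_one(i, state):
    """P(x_i = 1 | all other variables), from the unnormalized joint."""
    s0 = list(state)
    s0[i] = 0
    s1 = list(state)
    s1[i] = 1
    p0, p1 = joint[tuple(s0)], joint[tuple(s1)]
    return p1 / (p0 + p1)

def gibbs(num_sweeps, rng, init=(0, 0)):
    state = list(init)
    samples = []
    for _ in range(num_sweeps):
        for i in range(len(state)):  # resample each variable in turn
            state[i] = 1 if rng.random() < conditional_prob_one(i, state) else 0
        samples.append(tuple(state))
    return samples

samples = gibbs(50_000, random.Random(0))
estimate = sum(x0 for x0, _ in samples) / len(samples)
exact = (joint[(1, 0)] + joint[(1, 1)]) / sum(joint.values())
print(estimate, exact)  # fraction of time x0 = 1 versus the exact marginal
```

There is no accept/reject step: every resampled value is kept, as in the Metropolis–Hastings view with acceptance probability 1.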

Gibbs sampling

Alternative explanation:
Chain is currently at x.
At equilibrium can assume $x \sim P(x)$.
Consistent with $x_{j \ne i} \sim P(x_{j \ne i})$, $x_i \sim P(x_i | x_{j \ne i})$.
Pretend $x_i$ was never sampled and do it again.

This view may be useful later for non-parametric applications.

"Routine" Gibbs sampling

Gibbs sampling benefits from few free choices and convenient features of conditional distributions:

– Conditionals with a few discrete settings can be explicitly normalized:

$$P(x_i | x_{j \ne i}) \propto P(x_i, x_{j \ne i}) = \frac{P(x_i, x_{j \ne i})}{\sum_{x_i'} P(x_i', x_{j \ne i})} \quad \leftarrow \text{this sum is small and easy}$$

– Continuous conditionals are only univariate ⇒ amenable to standard sampling methods.

WinBUGS and OpenBUGS sample graphical models using these tricks.

Summary so far

– We need approximate methods to solve sums/integrals
– Monte Carlo does not explicitly depend on dimension, although simple methods work only in low dimensions
– Markov chain Monte Carlo (MCMC) can make local moves. By assuming less, it's more applicable to higher dimensions
– simple computations ⇒ "easy" to implement (harder to diagnose)

How do we use these MCMC samples?

End of Lecture 1

Quick review

Construct a biased random walk that explores a target dist.
Markov steps, $x^{(s)} \sim T\big(x^{(s)} \leftarrow x^{(s-1)}\big)$
MCMC gives approximate, correlated samples:

$$E_P[f] \approx \frac{1}{S}\sum_{s=1}^{S} f(x^{(s)})$$

Example transitions:

Metropolis–Hastings: $T(x' \leftarrow x) = Q(x'; x)\min\left(1, \frac{P(x')Q(x; x')}{P(x)Q(x'; x)}\right)$

Gibbs sampling: $T_i(x' \leftarrow x) = P(x_i' | x_{j \ne i})\,\delta(x'_{j \ne i} - x_{j \ne i})$

How should we run MCMC?

– The samples aren't independent. Should we thin, only keep every Kth sample?
– Arbitrary initialization means starting iterations are bad. Should we discard a "burn-in" period?
– Maybe we should perform multiple runs?
– How do we know if we have run for long enough?

Forming estimates

Approximately independent samples can be obtained by thinning. However, all the samples can be used.

Use the simple Monte Carlo estimator on MCMC samples. It is:
– consistent
– unbiased if the chain has "burned in"

The correct motivation to thin: if computing $f(x^{(s)})$ is expensive.

Empirical diagnostics

[Figure from Rasmussen (2000)]

Recommendations

For diagnostics: standard software packages like R-CODA.

For opinion on thinning, multiple runs, burn in, etc.:
Practical Markov chain Monte Carlo, Charles J. Geyer, Statistical Science, 7(4):473–483, 1992. http://www.jstor.org/stable/2246094

Consistency checks

Do I get the right answer on tiny versions of my problem?

Can I make good inferences about synthetic data drawn from my model?

Getting it right: joint distribution tests of posterior simulators, John Geweke, JASA, 99(467):799–804, 2004.

[next: using the samples]

Making good use of samples

Is the standard estimator too noisy? E.g. we need many samples from a distribution to estimate its tail.

We can often do some analytic calculations.

Finding $P(x_i = 1)$

Method 1: fraction of time $x_i = 1$

$$P(x_i = 1) = \sum_{x_i} I(x_i = 1)P(x_i) \approx \frac{1}{S}\sum_{s=1}^{S} I\big(x_i^{(s)} = 1\big), \quad x_i^{(s)} \sim P(x_i)$$

Method 2: average of $P(x_i = 1 | x_{\backslash i})$

$$P(x_i = 1) = \sum_{x_{\backslash i}} P(x_i = 1 | x_{\backslash i})P(x_{\backslash i}) \approx \frac{1}{S}\sum_{s=1}^{S} P\big(x_i = 1 | x_{\backslash i}^{(s)}\big), \quad x_{\backslash i}^{(s)} \sim P(x_{\backslash i})$$

Example of "Rao-Blackwellization". See also "waste recycling".
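The two ways of estimating $P(x_i = 1)$ can be compared on a toy problem. The Python sketch below is an illustration under assumptions not in the lecture: a made-up joint table over two binary variables, exact independent samples from that joint rather than MCMC samples, and hypothetical function names. It repeats both estimators many times and reports their spread.

```python
import math
import random

# Made-up normalized joint over (x0, x1); the exact marginal P(x0 = 1) is 0.5.
joint = {(0, 0): 0.4, (0, 1): 0.1, (1, 0): 0.2, (1, 1): 0.3}

def sample_joint(rng):
    u = rng.random()
    cumulative = 0.0
    for state, prob in joint.items():
        cumulative += prob
        if u < cumulative:
            return state
    return state  # guard against floating-point round-off

def prob_x0_is_one_given_x1(x1):
    return joint[(1, x1)] / (joint[(0, x1)] + joint[(1, x1)])

def both_estimates(num_samples, rng):
    samples = [sample_joint(rng) for _ in range(num_samples)]
    # Method 1: fraction of samples with x0 = 1.
    method1 = sum(1 for x0, _ in samples if x0 == 1) / num_samples
    # Method 2: average the analytic conditional P(x0 = 1 | x1) over samples of x1.
    method2 = sum(prob_x0_is_one_given_x1(x1) for _, x1 in samples) / num_samples
    return method1, method2

def spread(values):
    mean = sum(values) / len(values)
    return math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))

rng = random.Random(0)
runs = [both_estimates(100, rng) for _ in range(500)]
spread1 = spread([m1 for m1, _ in runs])
spread2 = spread([m2 for _, m2 in runs])
print(spread1, spread2)  # Method 2 should show the smaller spread
```

Both estimators target the same marginal; the second replaces a noisy indicator with its conditional expectation, which cannot increase the variance.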

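Processing samples

This is easy:

$$I = \sum_{x} f(x_i)P(x) \approx \frac{1}{S}\sum_{s=1}^{S} f\big(x_i^{(s)}\big), \quad x^{(s)} \sim P(x)$$

But this might be better:

$$I = \sum_{x} f(x_i)P(x_i | x_{\backslash i})P(x_{\backslash i}) = \sum_{x_{\backslash i}}\left(\sum_{x_i} f(x_i)P(x_i | x_{\backslash i})\right)P(x_{\backslash i})$$

$$\approx \frac{1}{S}\sum_{s=1}^{S}\left(\sum_{x_i} f(x_i)P\big(x_i | x_{\backslash i}^{(s)}\big)\right), \quad x_{\backslash i}^{(s)} \sim P(x_{\backslash i})$$

A more general form of "Rao-Blackwellization".

Summary so far

– MCMC algorithms are general and often easy to implement
– Running them is a bit messy. . . . . . but there are some established procedures.
– Given the samples there might be a choice of estimators

Next question: Is MCMC research all about finding a good Q(x)?

Auxiliary variables

The point of MCMC is to marginalize out variables, but one can introduce more variables:

$$\int f(x)P(x)\,dx = \int f(x)P(x, v)\,dx\,dv \approx \frac{1}{S}\sum_{s=1}^{S} f(x^{(s)}), \quad x, v \sim P(x, v)$$

We might want to do this if
– P(x|v) and P(v|x) are simple
– P(x, v) is otherwise easier to navigate

Swendsen–Wang (1987)

Seminal algorithm using auxiliary variables.

Edwards and Sokal (1988) identified and generalized the "Fortuin-Kasteleyn-Swendsen-Wang" auxiliary variable joint distribution that underlies the algorithm.

Slice sampling idea

Sample point uniformly under curve $\tilde{P}(x) \propto P(x)$

$p(u | x) = \text{Uniform}[0, \tilde{P}(x)]$

$$p(x | u) \propto \begin{cases} 1 & \tilde{P}(x) \ge u \\ 0 & \text{otherwise} \end{cases} \quad = \text{"Uniform on the slice"}$$

Slice sampling

Unimodal conditionals:
– bracket slice
– sample uniformly within bracket
– shrink bracket if $\tilde{P}(x) < u$ (the point is off the slice)

Neal (2003) contains many ideas.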
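The bracket-and-shrink procedure for a scalar variable can be sketched as follows. This Python sketch is an illustration only: the random initial bracket placement and the "stepping out" loop follow the general scheme in Neal (2003) rather than anything spelled out above, and `slice_sample_step`, `width` and the standard normal target are assumed names and choices.

```python
import math
import random

def slice_sample_step(x, log_ptilde, width, rng):
    """One slice-sampling update of a scalar x, for a unimodal log_ptilde."""
    # Height: u ~ Uniform(0, ptilde(x)], handled in the log domain.
    log_u = log_ptilde(x) + math.log(1.0 - rng.random())
    # Place a bracket of size `width` randomly around x, then step out
    # until both ends are off the slice (assumed scheme, see lead-in).
    left = x - width * rng.random()
    right = left + width
    while log_ptilde(left) > log_u:
        left -= width
    while log_ptilde(right) > log_u:
        right += width
    # Sample uniformly within the bracket, shrinking it after each rejection.
    while True:
        proposal = left + (right - left) * rng.random()
        if log_ptilde(proposal) >= log_u:
            return proposal          # on the slice: accept
        if proposal < x:
            left = proposal          # off the slice: shrink towards x
        else:
            right = proposal

rng = random.Random(0)
x = 0.0
samples = []
for _ in range(20_000):
    x = slice_sample_step(x, lambda z: -0.5 * z * z, width=2.0, rng=rng)
    samples.append(x)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(mean, var)  # should be near 0 and 1 for the standard normal target
```

The bracket always contains the current point, so the shrinking loop is guaranteed to terminate, and the initial `width` affects efficiency but not correctness.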

Hamiltonian dynamics

Construct a landscape with gravitational potential energy, E(x):

$$P(x) \propto e^{-E(x)}, \quad E(x) = -\log \tilde{P}(x)$$

Introduce velocity v carrying kinetic energy $K(v) = v^\top v / 2$

Some physics:
– Total energy or Hamiltonian, $H = E(x) + K(v)$
– Frictionless ball rolling $(x, v) \to (x', v')$ satisfies $H(x', v') = H(x, v)$
– Ideal Hamiltonian dynamics are time reversible: reverse v and the ball will return to its start point

Hamiltonian Monte Carlo

Define a joint distribution:

$$P(x, v) \propto e^{-E(x)}e^{-K(v)} = e^{-E(x) - K(v)} = e^{-H(x, v)}$$

Velocity is independent of position and Gaussian distributed.

Markov chain operators
– Gibbs sample velocity
– Simulate Hamiltonian dynamics then flip sign of velocity
  – Hamiltonian 'proposal' is deterministic and reversible: $q(x', v'; x, v) = q(x, v; x', v') = 1$
  – Conservation of energy means $P(x, v) = P(x', v')$
  – Metropolis acceptance probability is 1

Except we can't simulate Hamiltonian dynamics exactly.

Leap-frog dynamics

A discrete approximation to Hamiltonian dynamics:

$$v_i(t + \tfrac{\epsilon}{2}) = v_i(t) - \frac{\epsilon}{2}\frac{\partial E(x(t))}{\partial x_i}$$

$$x_i(t + \epsilon) = x_i(t) + \epsilon\, v_i(t + \tfrac{\epsilon}{2})$$

$$v_i(t + \epsilon) = v_i(t + \tfrac{\epsilon}{2}) - \frac{\epsilon}{2}\frac{\partial E(x(t + \epsilon))}{\partial x_i}$$

– H is not conserved
– dynamics are still deterministic and reversible
– Acceptance probability becomes $\min[1, \exp(H(v, x) - H(v', x'))]$

Hamiltonian Monte Carlo

The algorithm:
– Gibbs sample velocity $\sim N(0, I)$
– Simulate leapfrog dynamics for L steps
– Accept new position with probability $\min[1, \exp(H(v, x) - H(v', x'))]$

The original name is Hybrid Monte Carlo, with reference to the "hybrid" dynamical simulation method on which it was based.
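The three steps of the algorithm (resample velocity, leapfrog, accept or reject) can be sketched for a one-dimensional target. This Python sketch is illustrative: the function names, the standard normal target with $E(x) = x^2/2$, and the step size and trajectory length are all assumed values, not settings from the lecture.

```python
import math
import random

def leapfrog(x, v, grad_energy, step_size, num_steps):
    """Leapfrog integration of Hamiltonian dynamics for a scalar position."""
    v = v - 0.5 * step_size * grad_energy(x)     # initial half step for velocity
    for step in range(num_steps):
        x = x + step_size * v                    # full step for position
        if step < num_steps - 1:
            v = v - step_size * grad_energy(x)   # two adjacent half steps merged
    v = v - 0.5 * step_size * grad_energy(x)     # final half step for velocity
    return x, v

def hmc(energy, grad_energy, init, num_iters, step_size, num_steps, rng):
    x = init
    samples = []
    for _ in range(num_iters):
        v = rng.gauss(0.0, 1.0)                  # Gibbs sample the velocity
        h_old = energy(x) + 0.5 * v * v
        x_new, v_new = leapfrog(x, v, grad_energy, step_size, num_steps)
        h_new = energy(x_new) + 0.5 * v_new * v_new
        # Accept with probability min(1, exp(H_old - H_new)). The velocity sign
        # flip is omitted: K(v) is symmetric and v is resampled next iteration.
        if math.log(1.0 - rng.random()) < h_old - h_new:
            x = x_new
        samples.append(x)
    return samples

rng = random.Random(0)
samples = hmc(
    energy=lambda x: 0.5 * x * x,    # assumed target: standard normal
    grad_energy=lambda x: x,
    init=0.0,
    num_iters=20_000,
    step_size=0.2,                   # assumed leapfrog step size
    num_steps=8,                     # assumed number of leapfrog steps L
    rng=rng,
)
mean = sum(samples) / len(samples)
var = sum((s - mean) ** 2 for s in samples) / len(samples)
print(mean, var)  # should be near 0 and 1
```

For this target a trajectory length (step size times number of steps) near π would map x to almost exactly −x and mix poorly, which is one reason the trajectory length needs tuning in practice.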

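Summary of auxiliary variables

– Swendsen–Wang
– Slice sampling
– Hamiltonian (Hybrid) Monte Carlo

A fair amount of my research (not covered in this tutorial) has been finding the right auxiliary representation on which to run standard MCMC updates.

Example benefits:
– Population methods to give better mixing and exploit parallel hardware
– Being robust to bad random number generators
– Removing step-size parameters when slice sampling doesn't really apply

Finding normalizers is hard

Prior sampling: like finding fraction of needles in a hay-stack

$$P(D|M) = \int P(D|\theta, M)P(\theta|M)\,d\theta \approx \frac{1}{S}\sum_{s=1}^{S} P(D|\theta^{(s)}, M), \quad \theta^{(s)} \sim P(\theta|M)$$

. . . usually has huge variance

Similarly for undirected graphs:

$$P(x) = \frac{\tilde{P}(x)}{Z}, \quad Z = \sum_{x} \tilde{P}(x)$$

I will use this as an easy-to-illustrate case-study.

Benchmark experiment

[Figure panels: Training set, RBM samples, MoB samples]

RBM setup:
– 28 × 28 = 784 binary visible variables
– 500 binary hidden variables

Goal: Compare P(x) on test set, ($P_{\mathrm{RBM}}(x) = \tilde{P}(x)/Z$)

Simple Importance Sampling

$$Z = \sum_{x} \frac{\tilde{P}(x)}{Q(x)}Q(x) \approx \frac{1}{S}\sum_{s=1}^{S} \frac{\tilde{P}(x^{(s)})}{Q(x^{(s)})}, \quad x^{(s)} \sim Q(x)$$

[Figure: sample images $x^{(1)}, x^{(2)}, \ldots, x^{(6)}, \ldots$ drawn from Q]

With a uniform Q over $2^D$ binary states:

$$Z = 2^D\sum_{x} \frac{1}{2^D}\tilde{P}(x) \approx \frac{2^D}{S}\sum_{s=1}^{S} \tilde{P}(x^{(s)}), \quad x^{(s)} \sim \text{Uniform}$$

"Posterior" Sampling

Sample from $P(x) = \frac{\tilde{P}(x)}{Z}$, or $P(\theta|D) = \frac{P(D|\theta)P(\theta)}{P(D)}$

[Figure: sample images $x^{(1)}, x^{(2)}, \ldots, x^{(6)}, \ldots$ drawn from P]

$$Z = \sum_{x} \tilde{P}(x), \qquad Z \text{ "}\approx\text{" } \frac{1}{S}\sum_{s=1}^{S} \frac{\tilde{P}(x^{(s)})}{P(x^{(s)})} = Z$$

Finding a Volume

[Figure: horizontal axis x, vertical axis P(x). Lake analogy and figure from MacKay textbook (2003)]

Annealing / Tempering

e.g. $P(x; \beta) \propto \tilde{P}(x)^{\beta}\,\pi(x)^{(1-\beta)}$

[Figure panels: β = 0, β = 0.01, β = 0.1, β = 0.25, β = 0.5, β = 1]

1/β = "temperature"

Using other distributions

Chain between posterior and prior:

e.g. $P(\theta; \beta) = \frac{1}{Z(\beta)}P(D|\theta)^{\beta}P(\theta)$

[Figure panels: β = 0, β = 0.01, β = 0.1, β = 0.25, β = 0.5, β = 1]

Advantages: mixing easier at low β, good initialization for higher β?

$$\frac{Z(1)}{Z(0)} = \frac{Z(\beta_1)}{Z(0)} \cdot \frac{Z(\beta_2)}{Z(\beta_1)} \cdot \frac{Z(\beta_3)}{Z(\beta_2)} \cdot \frac{Z(\beta_4)}{Z(\beta_3)} \cdot \frac{Z(1)}{Z(\beta_4)}$$

Related to annealing or tempering, 1/β = "temperature"

Parallel tempering

Normal MCMC transitions + swap proposals on $P(X) = \prod_{\beta} P(X; \beta)$

Problems / trade-offs:
– obvious space cost
– need to equilibrate larger system
– information from low β diffuses up by slow random walk

Tempered transitions

Drive temperature up. . . . . . and back down

Proposal: swap order of points so final point $\check{x}_0$ putatively $\sim P(x)$

Acceptance probability:

$$\min\left[1,\; \frac{P_{\beta_1}(\hat{x}_0)}{P(\hat{x}_0)} \cdots \frac{P_{\beta_K}(\hat{x}_{K-1})}{P_{\beta_{K-1}}(\hat{x}_{K-1})} \cdot \frac{P_{\beta_{K-1}}(\check{x}_{K-1})}{P_{\beta_K}(\check{x}_{K-1})} \cdots \frac{P(\check{x}_0)}{P_{\beta_1}(\check{x}_0)}\right]$$

Annealed Importance Sampling

$$P(X) = \frac{\tilde{P}(x_K)}{Z}\prod_{k=1}^{K} \tilde{T}_k(x_{k-1}; x_k), \qquad Q(X) = \pi(x_0)\prod_{k=1}^{K} T_k(x_k; x_{k-1})$$

Then standard importance sampling of $P(X) = \frac{\tilde{P}(X)}{Z}$ with Q(X).

Annealed Importance Sampling

$$Z \approx \frac{1}{S}\sum_{s=1}^{S} \frac{\tilde{P}(X^{(s)})}{Q(X^{(s)})}$$

[Figure: the Q chain runs down the sequence of distributions, the P chain runs back up]

Summary on Z

Whirlwind tour of roughly how to find Z with Monte Carlo.

The algorithms really have to be good at exploring the distribution.

These are also the Monte Carlo approaches to watch for general use on the hardest problems. Can be useful for optimization too.

See the references for more.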
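The uniform importance sampling estimate of Z from the "Simple Importance Sampling" slide can be tried on a model small enough to enumerate. The Python sketch below is a toy illustration: the chain-structured model with D = 10 binary variables, the coupling `J`, and the function names are all assumptions, chosen so that the exact Z is available for comparison.

```python
import itertools
import math
import random

D = 10    # number of binary variables (small enough to enumerate all 2^D states)
J = 0.5   # hypothetical coupling strength between neighbouring variables

def ptilde(x):
    """Unnormalized probability: rewards neighbouring variables that agree."""
    agreements = sum(1 for a, b in zip(x, x[1:]) if a == b)
    return math.exp(J * agreements)

def exact_Z():
    return sum(ptilde(x) for x in itertools.product((0, 1), repeat=D))

def uniform_importance_Z(num_samples, rng):
    """Z is approximated by 2^D times the average of ptilde over uniform samples."""
    total = 0.0
    for _ in range(num_samples):
        x = tuple(rng.randrange(2) for _ in range(D))
        total += ptilde(x)
    return (2 ** D) * total / num_samples

rng = random.Random(0)
z_exact = exact_Z()
z_estimate = uniform_importance_Z(20_000, rng)
print(z_exact, z_estimate)
```

This works here only because the toy distribution is close to uniform; for a peaked distribution over 784 variables, almost all uniform samples would contribute negligibly, which is the motivation for the annealing-based methods above.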

References

Further reading (1/2)

General references:

Probabilistic inference using Markov chain Monte Carlo methods, Radford M. Neal, Technical report: CRG-TR-93-1, Department of Computer Science, University of Toronto, 1993. http://www.cs.toronto.edu/~radford/review.abstract.html

Various figures and more came from (see also references therein):

Advances in Markov chain Monte Carlo methods. Iain Murray. 2007. http://www.cs.toronto.edu/~murray/pub/07thesis/

Information theory, inference, and learning algorithms. David MacKay, 2003. http://www.inference.phy.cam.ac.uk/mackay/itila/

Pattern recognition and machine learning. Christopher M. Bishop. 2006. http://research.microsoft.com/~cmbishop/PRML/

Specific points:

If you do Gibbs sampling with continuous distributions this method, which I omitted for material-overload reasons, may help:
Suppressing random walks in Markov chain Monte Carlo using ordered overrelaxation, Radford M. Neal, Learning in graphical models, M. I. Jordan (editor), 205–228, Kluwer Academic Publishers, 1998. http://www.cs.toronto.edu/~radford/overk.abstract.html

An example of picking estimators carefully:
Speed-up of Monte Carlo simulations by sampling of rejected states, Frenkel, D, Proceedings of the National Academy of Sciences, 101(51):17571–17575, The National Academy of Sciences, 2004. http://www.pnas.org/cgi/content/abstract/101/51/17571

A key reference for auxiliary variable methods is:
Generalizations of the Fortuin-Kasteleyn-Swendsen-Wang representation and Monte Carlo algorithm, Robert G. Edwards and A. D. Sokal, Physical Review, 38:2009–2012, 1988.

Slice sampling, Radford M. Neal, Annals of Statistics, 31(3):705–767, 2003. http://www.cs.toronto.edu/~radford/slice-aos.abstract.html

Bayesian training of backpropagation networks by the hybrid Monte Carlo method, Radford M. Neal, Technical report: CRG-TR-92-1, Connectionist Research Group, University of Toronto, 1992. http://www.cs.toronto.edu/~radford/bbp.abstract.html

An early reference for parallel tempering:
Markov chain Monte Carlo maximum likelihood, Geyer, C. J, Computing Science and Statistics: Proceedings of the 23rd Symposium on the Interface, 156–163, 1991.

Sampling from multimodal distributions using tempered transitions, Radford M. Neal, Statistics and Computing, 6(4):353–366, 1996.

Further reading (2/2)

Software:

Gibbs sampling for graphical models: http://mathstat.helsinki.fi/openbugs/
Neural networks and other flexible models: http://www.cs.utoronto.ca/~radford/fbm.software.html
CODA: http://www-fis.iarc.fr/coda/

Other Monte Carlo methods:

Nested sampling is a new Monte Carlo method with some interesting properties:
Nested sampling for general Bayesian computation, John Skilling, Bayesian Analysis, 2006. (to appear, posted online June 5). http://ba.stat.cmu.edu/journal/forthcoming/skilling.pdf

Approaches based on the "multi-canonical ensemble" also solve some of the problems with traditional temperature-based methods:
Multicanonical ensemble: a new approach to simulate first-order phase transitions, Bernd A. Berg and Thomas Neuhaus, Phys. Rev. Lett, 68(1):9–12, 1992. http://prola.aps.org/abstract/PRL/v68/i1/p91

A good review paper:
Extended Ensemble Monte Carlo. Y Iba. Int J Mod Phys C [Computational Physics and Physical Computation] 12(5):623–656. 2001.

Particle filters / Sequential Monte Carlo are famously successful in time series modelling, but are more generally applicable. This may be a good place to start: http://www.cs.ubc.ca/~arnaud/journals.html

Exact or perfect sampling uses Markov chain simulation but suffers no initialization bias. An amazing feat when it can be performed:
Annotated bibliography of perfectly random sampling with Markov chains, David B. Wilson. http://dbwilson.com/exact/

MCMC does not apply to doubly-intractable distributions. For what that even means and possible solutions see:

An efficient Markov chain Monte Carlo method for distributions with intractable normalising constants, J. Møller, A. N. Pettitt, R. Reeves and K. K. Berthelsen, Biometrika, 93(2):451–458, 2006.

MCMC for doubly-intractable distributions, Iain Murray, Zoubin Ghahramani and David J. C. MacKay, Proceedings of the 22nd Annual Conference on Uncertainty in Artificial Intelligence (UAI-06), Rina Dechter and Thomas S. Richardson (editors), 359–366, AUAI Press, 2006. http://www.gatsby.ucl.ac.uk/~iam23/pub/06doublyintractable/doublyintractable.pdf

eng.

cam.

ac.

uk/mlss09/IainMurrayhttp://www.

cs.

toronto.

edu/~murray/AstatisticalproblemWhatistheaverageheightoftheMLSSlecturersMethod:measuretheirheights,addthemupanddividebyN=20.

WhatistheaverageheightfofpeoplepinCambridgeCEp∈C[f(p)]≡1|C|p∈Cf(p),"intractable"≈1SSs=1fp(s),forrandomsurveyofSpeople{p(s)}∈CSurveyingworksforlargeandnotionallyinnitepopulations.

SimpleMonteCarloStatisticalsamplingcanbeappliedtoanyexpectation:Ingeneral:f(x)P(x)dx≈1SSs=1f(x(s)),x(s)P(x)Example:makingpredictionsp(x|D)=P(x|θ,D)P(θ|D)dθ≈1SSs=1P(x|θ(s),D),θ(s)P(θ|D)Moreexamples:E-stepstatisticsinEM,BoltzmannmachinelearningPropertiesofMonteCarloEstimator:f(x)P(x)dx≈f≡1SSs=1f(x(s)),x(s)P(x)Estimatorisunbiased:EP({x(s)})f=1SSs=1EP(x)[f(x)]=EP(x)[f(x)]Varianceshrinks∝1/S:varP({x(s)})f=1S2Ss=1varP(x)[f(x)]=varP(x)[f(x)]/S"Errorbars"shrinklike√SAdumbapproximationofπP(x,y)=10S=12;a=rand(S,2);4*mean(sum(a.

*a,2)S=1e7;a=rand(S,2);4*mean(sum(a.

*a,2)4*quadl(@(x)sqrt(1-x.

^2),0,1,tolerance)Givesπto6dp'sin108evaluations,machineprecisionin2598.

(NBMatlab'squadlfailsatzerotolerance)Otherlecturersarecoveringalternativesforhigherdimensions.

Noapprox.

integrationmethodalwaysworks.

SometimesMonteCarloisthebest.

Eye-ballingsamplesSometimessamplesarepleasingtolookat:(ifyou'reintogeometricalcombinatorics)FigurebyProppandWilson.

Source:MacKaytextbook.

Sanitycheckprobabilisticmodellingassumptions:DatasamplesMoBsamplesRBMsamplesMonteCarloandInsomniaEnricoFermi(1901–1954)tookgreatdelightinastonishinghiscolleagueswithhisremakablyaccuratepredictionsofexperimentalresults.

.

.

herevealedthathis"guesses"werereallyderivedfromthestatisticalsamplingtechniquesthatheusedtocalculatewithwheneverinsomniastruckintheweemorninghours!

—ThebeginningoftheMonteCarlomethod,N.

MetropolisSamplingfromaBayesnetAncestralpassfordirectedgraphicalmodels:—sampleeachtoplevelvariablefromitsmarginal—sampleeachothernodefromitsconditionalonceitsparentshavebeensampledSample:AP(A)BP(B)CP(C|A,B)DP(D|B,C)EP(D|C,D)P(A,B,C,D,E)=P(A)P(B)P(C|A,B)P(D|B,C)P(E|C,D)SamplingtheconditionalsUselibraryroutinesforunivariatedistributions(andsomeotherspecialcases)Thisbook(freeonline)explainshowsomeofthemworkhttp://cg.

scs.

carleton.

ca/~luc/rnbookindex.

htmlSamplingfromdistributionsDrawpointsuniformlyunderthecurve:ProbabilitymasstoleftofpointUniform[0,1]SamplingfromdistributionsHowtoconvertsamplesfromaUniform[0,1]generator:FigurefromPRML,Bishop(2006)h(y)=y∞p(y)dyDrawmasstoleftofpoint:uUniform[0,1]Sample,y(u)=h1(u)Althoughwecan'talwayscomputeandinverth(y)RejectionsamplingSamplingunderneathaP(x)∝P(x)curveisalsovalidDrawunderneathasimplecurvekQ(x)≥P(x):–DrawxQ(x)–heightuUniform[0,kQ(x)]DiscardthepointifaboveP,i.

e.

ifu>P(x)ImportancesamplingComputingP(x)andQ(x),thenthrowingxawayseemswastefulInsteadrewritetheintegralasanexpectationunderQ:f(x)P(x)dx=f(x)P(x)Q(x)Q(x)dx,(Q(x)>0ifP(x)>0)≈1SSs=1f(x(s))P(x(s))Q(x(s)),x(s)Q(x)ThisisjustsimpleMonteCarloagain,soitisunbiased.

Importancesamplingapplieswhentheintegralisnotanexpectation.

Divideandmultiplyanyintegrandbyaconvenientdistribution.

Importancesampling(2)PreviousslideassumedwecouldevaluateP(x)=P(x)/ZPf(x)P(x)dx≈ZQZP1SSs=1f(x(s))P(x(s))Q(x(s))r(s),x(s)Q(x)≈1SSs=1f(x(s))r(s)1Ssr(s)≡Ss=1f(x(s))w(s)ThisestimatorisconsistentbutbiasedExercise:ProvethatZP/ZQ≈1Ssr(s)SummarysofarSumsandintegrals,oftenexpectations,occurfrequentlyinstatisticsMonteCarloapproximatesexpectationswithasampleaverageRejectionsamplingdrawssamplesfromcomplexdistributionsImportancesamplingappliesMonteCarloto'any'sum/integralApplicationtolargeproblemsWeoftencan'tdecomposeP(X)intolow-dimensionalconditionalsUndirectedgraphicalmodels:P(x)=1Zifi(x)PosteriorofadirectedgraphicalmodelP(A,B,C,D|E)=P(A,B,C,D,E)P(E)Weoftendon'tknowZorP(E)ApplicationtolargeproblemsRejection&importancesamplingscalebadlywithdimensionalityExample:P(x)=N(0,I),Q(x)=N(0,σ2I)Rejectionsampling:Requiresσ≥1.

Fractionofproposalsaccepted=σDImportancesampling:Varianceofimportanceweights=σ221/σ2D/21Innite/undenedvarianceifσ≤1/√2Importancesamplingweightsw=0.

00548w=1.

59e-08w=9.

65e-06w=0.

371w=0.

103w=1.

01e-08w=0.

111w=1.

92e-09w=0.

0126w=1.

1e-51MetropolisalgorithmPerturbparameters:Q(θ;θ),e.

g.

N(θ,σ2)Acceptwithprobabilitymin1,P(θ|D)P(θ|D)OtherwisekeepoldparametersThissubgurefromPRML,Bishop(2006)Detail:Metropolis,asstated,requiresQ(θ;θ)=Q(θ;θ)MarkovchainMonteCarloConstructabiasedrandomwalkthatexplorestargetdistP(x)Markovsteps,xtT(xt←xt1)MCMCgivesapproximate,correlatedsamplesfromP(x)TransitionoperatorsDiscreteexampleP=3/51/51/5T=2/31/21/21/601/21/61/20Tij=T(xi←xj)PisaninvariantdistributionofTbecauseTP=P,i.

e.

xT(x←x)P(x)=P(x)AlsoPistheequilibriumdistributionofT:Tomachineprecision:T1000@1001A=0@3/51/51/51A=PErgodicityrequires:TK(x←x)>0forallx:P(x)>0,forsomeKDetailedBalanceDetailedbalancemeans→x→xand→x→xareequallyprobable:T(x←x)P(x)=T(x←x)P(x)Detailedbalanceimpliestheinvariantcondition:xT(x←x)P(x)=P(x)¨¨¨¨¨¨¨¨¨¨¨¨¨¨¨B1xT(x←x)Enforcingdetailedbalanceiseasy:itonlyinvolvesisolatedpairsReverseoperatorsIfTsatisesstationarity,wecandeneareverseoperatorT(x←x)∝T(x←x)P(x)=T(x←x)P(x)xT(x←x)P(x)=T(x←x)P(x)P(x)Generalizedbalancecondition:T(x←x)P(x)=T(x←x)P(x)alsoimpliestheinvariantconditionandisnecessary.

Operatorssatisfyingdetailedbalancearetheirownreverseoperator.

Metropolis–HastingsTransitionoperatorProposeamovefromthecurrentstateQ(x;x),e.

g.

N(x,σ2)Acceptwithprobabilitymin1,P(x)Q(x;x)P(x)Q(x;x)OtherwisenextstateinchainisacopyofcurrentstateNotesCanuseP∝P(x);normalizercancelsinacceptanceratioSatisesdetailedbalance(shownbelow)QmustbechosentofullltheothertechnicalrequirementsP(x)·T(x←x)=P(x)·Q(x;x)min1,P(x)Q(x;x)P(x)Q(x;x)!

=min"P(x)Q(x;x),P(x)Q(x;x)"=P(x)·Q(x;x)min1,P(x)Q(x;x)P(x)Q(x;x)!

=P(x)·T(x←x)Matlab/Octavecodefordemofunctionsamples=dumb_metropolis(init,log_ptilde,iters,sigma)D=numel(init);samples=zeros(D,iters);state=init;Lp_state=log_ptilde(state);forss=1:iters%Proposeprop=state+sigma*randn(size(state));Lp_prop=log_ptilde(prop);iflog(rand)<(Lp_prop-Lp_state)%Acceptstate=prop;Lp_state=Lp_prop;endsamples(:,ss)=state(:);endStep-sizedemoExploreN(0,1)withdierentstepsizesσsigma=@(s)plot(dumb_metropolis(0,@(x)-0.

5*x*x,1e3,s));sigma(0.

1)99.

8%acceptssigma(1)68.

4%acceptssigma(100)0.

5%acceptsMetropolislimitationsGenericproposalsuseQ(x;x)=N(x,σ2)σlarge→manyrejectionsσsmall→slowdiusion:(L/σ)2iterationsrequiredCombiningoperatorsAsequenceofoperators,eachwithPinvariant:x0P(x)x1Ta(x1←x0)x2Tb(x2←x1)x3Tc(x3←x2)···P(x1)=x0Ta(x1←x0)P(x0)=P(x1)P(x2)=x1Tb(x2←x1)P(x1)=P(x2)P(x3)=x1Tc(x3←x2)P(x2)=P(x3)···—CombinationTcTbTaleavesPinvariant—Iftheycanreachanyx,TcTbTaisavalidMCMCoperator—IndividuallyTc,TbandTaneednotbeergodicGibbssamplingAmethodwithnorejections:–Initializextosomevalue–PickeachvariableinturnorrandomlyandresampleP(xi|xj=i)FigurefromPRML,Bishop(2006)Proofofvalidity:a)checkdetailedbalanceforcomponentupdate.

b)Metropolis–Hastings'proposals'P(xi|xj=i)acceptwithprob.

1Applyaseriesoftheseoperators.

Don'tneedtocheckacceptance.

GibbssamplingAlternativeexplanation:ChainiscurrentlyatxAtequilibriumcanassumexP(x)Consistentwithxj=iP(xj=i),xiP(xi|xj=i)Pretendxiwasneversampledanddoitagain.

Thisviewmaybeusefullaterfornon-parametricapplications"Routine"GibbssamplingGibbssamplingbenetsfromfewfreechoicesandconvenientfeaturesofconditionaldistributions:Conditionalswithafewdiscretesettingscanbeexplicitlynormalized:P(xi|xj=i)∝P(xi,xj=i)=P(xi,xj=i)xiP(xi,xj=i)←thissumissmallandeasyContinuousconditionalsonlyunivariateamenabletostandardsamplingmethods.

WinBUGSandOpenBUGSsamplegraphicalmodelsusingthesetricksSummarysofarWeneedapproximatemethodstosolvesums/integralsMonteCarlodoesnotexplicitlydependondimension,althoughsimplemethodsworkonlyinlowdimensionsMarkovchainMonteCarlo(MCMC)canmakelocalmoves.

Byassumingless,it'smoreapplicabletohigherdimensionssimplecomputations"easy"toimplement(hardertodiagnose).

HowdoweusetheseMCMCsamplesEndofLecture1QuickreviewConstructabiasedrandomwalkthatexploresatargetdist.

Markovsteps,x(s)Tx(s)←x(s1)MCMCgivesapproximate,correlatedsamplesEP[f]≈1SSs=1f(x(s))Exampletransitions:Metropolis–Hastings:T(x←x)=Q(x;x)min1,P(x)Q(x;x)P(x)Q(x;x)Gibbssampling:Ti(x←x)=P(xi|xj=i)δ(xj=ixj=i)HowshouldwerunMCMCThesamplesaren'tindependent.

Shouldwethin,onlykeepeveryKthsampleArbitraryinitializationmeansstartingiterationsarebad.

Shouldwediscarda"burn-in"periodMaybeweshouldperformmultiplerunsHowdoweknowifwehaverunforlongenoughFormingestimatesApproximatelyindependentsamplescanbeobtainedbythinning.

However,allthesamplescanbeused.

UsethesimpleMonteCarloestimatoronMCMCsamples.

Itis:—consistent—unbiasedifthechainhas"burnedin"Thecorrectmotivationtothin:ifcomputingf(x(s))isexpensiveEmpiricaldiagnosticsRasmussen(2000)RecommendationsFordiagnostics:StandardsoftwarepackageslikeR-CODAForopiniononthinning,multipleruns,burnin,etc.

PracticalMarkovchainMonteCarloCharlesJ.

Geyer,StatisticalScience.

7(4):473–483,1992.

http://www.

jstor.

org/stable/2246094ConsistencychecksDoIgettherightanswerontinyversionsofmyproblemCanImakegoodinferencesaboutsyntheticdatadrawnfrommymodelGettingitright:jointdistributiontestsofposteriorsimulators,JohnGeweke,JASA,99(467):799–804,2004.

[next:usingthesamples]MakinggooduseofsamplesIsthestandardestimatortoonoisye.

g.

needmanysamplesfromadistributiontoestimateitstailWecanoftendosomeanalyticcalculationsFindingP(xi=1)Method1:fractionoftimexi=1P(xi=1)=xiI(xi=1)P(xi)≈1SSs=1I(x(s)i),x(s)iP(xi)Method2:averageofP(xi=1|x\i)P(xi=1)=x\iP(xi=1|x\i)P(x\i)≈1SSs=1P(xi=1|x(s)\i),x(s)\iP(x\i)Exampleof"Rao-Blackwellization".

Seealso"wasterecycling".

ProcessingsamplesThisiseasyI=xf(xi)P(x)≈1SSs=1f(x(s)i),x(s)P(x)ButthismightbebetterI=xf(xi)P(xi|x\i)P(x\i)=x\ixif(xi)P(xi|x\i)P(x\i)≈1SSs=1xif(xi)P(xi|x(s)\i),x(s)\iP(x\i)Amoregeneralformof"Rao-Blackwellization".

SummarysofarMCMCalgorithmsaregeneralandofteneasytoimplementRunningthemisabitmessy.

.

.

.

.

.

buttherearesomeestablishedprocedures.

GiventhesamplestheremightbeachoiceofestimatorsNextquestion:IsMCMCresearchallaboutndingagoodQ(x)AuxiliaryvariablesThepointofMCMCistomarginalizeoutvariables,butonecanintroducemorevariables:f(x)P(x)dx=f(x)P(x,v)dxdv≈1SSs=1f(x(s)),x,vP(x,v)WemightwanttodothisifP(x|v)andP(v|x)aresimpleP(x,v)isotherwiseeasiertonavigateSwendsen–Wang(1987)SeminalalgorithmusingauxiliaryvariablesEdwardsandSokal(1988)identiedandgeneralizedthe"Fortuin-Kasteleyn-Swendsen-Wang"auxiliaryvariablejointdistributionthatunderliesthealgorithm.

SlicesamplingideaSamplepointuniformlyundercurveP(x)∝P(x)p(u|x)=Uniform[0,P(x)]p(x|u)∝1P(x)≥u0otherwise="Uniformontheslice"SlicesamplingUnimodalconditionalsbracketslicesampleuniformlywithinbracketshrinkbracketifP(x)

HamiltoniandynamicsConstructalandscapewithgravitationalpotentialenergy,E(x):P(x)∝eE(x),E(x)=logP(x)IntroducevelocityvcarryingkineticenergyK(v)=vv/2Somephysics:TotalenergyorHamiltonian,H=E(x)+K(v)Frictionlessballrolling(x,v)→(x,v)satisesH(x,v)=H(x,v)IdealHamiltoniandynamicsaretimereversible:–reversevandtheballwillreturntoitsstartpointHamiltonianMonteCarloDeneajointdistribution:P(x,v)∝eE(x)eK(v)=eE(x)K(v)=eH(x,v)VelocityisindependentofpositionandGaussiandistributedMarkovchainoperatorsGibbssamplevelocitySimulateHamiltoniandynamicsthenipsignofvelocity–Hamiltonian'proposal'isdeterministicandreversibleq(x,v;x,v)=q(x,v;x,v)=1–ConservationofenergymeansP(x,v)=P(x,v)–Metropolisacceptanceprobabilityis1Exceptwecan'tsimulateHamiltoniandynamicsexactlyLeap-frogdynamicsadiscreteapproximationtoHamiltoniandynamics:vi(t+2)=vi(t)2E(x(t))xixi(t+)=xi(t)+vi(t+2)pi(t+)=vi(t+2)2E(x(t+))xiHisnotconserveddynamicsarestilldeterministicandreversibleAcceptanceprobabilitybecomesmin[1,exp(H(v,x)H(v,x))]HamiltonianMonteCarloThealgorithm:GibbssamplevelocityN(0,I)SimulateLeapfrogdynamicsforLstepsAcceptnewpositionwithprobabilitymin[1,exp(H(v,x)H(v,x))]TheoriginalnameisHybridMonteCarlo,withreferencetothe"hybrid"dynamicalsimulationmethodonwhichitwasbased.

Summaryofauxiliaryvariables—Swendsen–Wang—Slicesampling—Hamiltonian(Hybrid)MonteCarloAfairamountofmyresearch(notcoveredinthistutorial)hasbeenndingtherightauxiliaryrepresentationonwhichtorunstandardMCMCupdates.

Examplebenets:PopulationmethodstogivebettermixingandexploitparallelhardwareBeingrobusttobadrandomnumbergeneratorsRemovingstep-sizeparameterswhenslicesampledoesn'treallyapplyFindingnormalizersishardPriorsampling:likendingfractionofneedlesinahay-stackP(D|M)=P(D|θ,M)P(θ|M)dθ=1SSs=1P(D|θ(s),M),θ(s)P(θ|M).

.

.

usuallyhashugevarianceSimilarlyforundirectedgraphs:P(x)=P(x)Z,Z=xP(x)Iwillusethisasaneasy-to-illustratecase-studyBenchmarkexperimentTrainingsetRBMsamplesMoBsamplesRBMsetup:—28*28=784binaryvisiblevariables—500binaryhiddenvariablesGoal:CompareP(x)ontestset,(PRBM(x)=P(x)/Z)SimpleImportanceSamplingZ=xP(x)Q(x)Q(x)≈1SSs=1P(x(s))Q(x),x(s)Q(x)x(1)=,x(2)=,x(3)=,x(4)=,x(5)=,x(6)=,.

.

.

Z=2Dx12DP(x)≈2DSSs=1P(x(s)),x(s)Uniform"Posterior"SamplingSamplefromP(x)=P(x)Z,orP(θ|D)=P(D|θ)P(θ)P(D)x(1)=,x(2)=,x(3)=,x(4)=,x(5)=,x(6)=,.

.

.

Z=xP(x)Z"≈"1SSs=1P(x)P(x)=ZFindingaVolume→x↓P(x)LakeanalogyandgurefromMacKaytextbook(2003)Annealing/Temperinge.

g.

P(x;β)∝P(x)βπ(x)(1β)β=0β=0.

01β=0.

1β=0.

25β=0.

5β=11/β="temperature"UsingotherdistributionsChainbetweenposteriorandprior:e.

g.

P(θ;β)=1Z(β)P(D|θ)βP(θ)β=0β=0.

01β=0.

1β=0.

25β=0.

5β=1Advantages:mixingeasieratlowβ,goodinitializationforhigherβZ(1)Z(0)=Z(β1)Z(0)·Z(β2)Z(β1)·Z(β3)Z(β2)·Z(β4)Z(β3)·Z(1)Z(β4)Relatedtoannealingortempering,1/β="temperature"ParalleltemperingNormalMCMCtransitions+swapproposalsonP(X)=βP(X;β)Problems/trade-os:obviousspacecostneedtoequilibriatelargersysteminformationfromlowβdiusesupbyslowrandomwalkTemperedtransitionsDrivetemperatureup.

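... and back down

Proposal: swap order of points so final point $\check{x}_0$ putatively $\sim P(x)$

Acceptance probability:

$$\min\left[1, \; \frac{P_{\beta_1}(x_0)}{P(x_0)} \cdots \frac{P_{\beta_K}(x_{K-1})}{P_{\beta_{K-1}}(x_{K-1})} \cdot \frac{P_{\beta_{K-1}}(\check{x}_{K-1})}{P_{\beta_K}(\check{x}_{K-1})} \cdots \frac{P(\check{x}_0)}{P_{\beta_1}(\check{x}_0)}\right]$$

Annealed Importance Sampling

$$P(X) = \frac{P^*(x_K)}{Z} \prod_{k=1}^{K} \tilde{T}_k(x_{k-1}; x_k), \qquad Q(X) = \pi(x_0) \prod_{k=1}^{K} T_k(x_k; x_{k-1})$$

Here $\tilde{T}_k$ denotes the reverse of the transition operator $T_k$. Then standard importance sampling of $P(X) = \dfrac{P^*(X)}{Z}$ with $Q(X)$:

$$Z \approx \frac{1}{S} \sum_{s=1}^{S} \frac{P^*(X)}{Q(X)}$$

[Figure: Q ↓, ↑ P]

Summary on Z

- Whirlwind tour of roughly how to find Z with Monte Carlo
- The algorithms really have to be good at exploring the distribution
- These are also the Monte Carlo approaches to watch for general use on the hardest problems. Can be useful for optimization too.

See the references for more.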
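As a concrete companion to the annealed importance sampling slides, here is a minimal sketch on a toy problem. Everything specific in it is an assumption made for illustration and not taken from the slides: the hypothetical binary model $\log P^*(x) = b^\top x + \tfrac{1}{2} x^\top W x$, the uniform base distribution $\pi$, the geometric path $P(x; \beta) \propto P^*(x)^{\beta} \pi(x)^{(1-\beta)}$ from the annealing slide, and one Metropolis sweep of single-bit flips as each transition $T_k$.

```python
import itertools
import numpy as np

def log_p_star(X, W, b):
    """Unnormalized log-probability b.x + 0.5 x^T W x for each binary row of X."""
    return X @ b + 0.5 * np.einsum("si,ij,sj->s", X, W, X)

def exact_Z(W, b):
    """Brute-force normalizer, feasible only for small D."""
    D = b.size
    X = np.array(list(itertools.product([0.0, 1.0], repeat=D)))
    return np.exp(log_p_star(X, W, b)).sum()

def ais_Z(W, b, betas, S, rng):
    """Annealed importance sampling estimate of Z using S independent runs.

    betas is an increasing sequence from 0 (uniform pi) to 1 (target P*).
    """
    D = b.size
    log_pi = -D * np.log(2.0)                       # normalized uniform base
    X = (rng.random((S, D)) < 0.5).astype(float)    # x_0 ~ pi
    log_w = np.zeros(S)
    for beta_prev, beta in zip(betas[:-1], betas[1:]):
        # Importance weight factor P*_k(x_{k-1}) / P*_{k-1}(x_{k-1})
        log_w += (beta - beta_prev) * (log_p_star(X, W, b) - log_pi)
        # Transition T_k: Metropolis sweep leaving P(x; beta) invariant.
        # pi is uniform, so it cancels in the acceptance ratio.
        for i in range(D):
            X_prop = X.copy()
            X_prop[:, i] = 1.0 - X_prop[:, i]
            log_ratio = beta * (log_p_star(X_prop, W, b) - log_p_star(X, W, b))
            accept = np.log(rng.random(S)) < log_ratio
            X[accept] = X_prop[accept]
    return np.exp(log_w).mean()
```

A typical call is `ais_Z(W, b, np.linspace(0.0, 1.0, 51), 2000, rng)`. Because $\pi$ is normalized, the mean weight estimates $Z(1)/Z(0) = Z$ directly. More intermediate temperatures reduce the variance of the weights at the cost of more transitions per run.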

References

Further reading (1/2)

General references:

Probabilistic inference using Markov chain Monte Carlo methods, Radford M. Neal, Technical report: CRG-TR-93-1, Department of Computer Science, University of Toronto, 1993. http://www.cs.toronto.edu/~radford/review.abstract.html

Various figures and more came from (see also references therein):

Advances in Markov chain Monte Carlo methods. Iain Murray. 2007. http://www.cs.toronto.edu/~murray/pub/07thesis/

Information theory, inference, and learning algorithms. David MacKay, 2003. http://www.inference.phy.cam.ac.uk/mackay/itila/

Pattern recognition and machine learning. Christopher M. Bishop. 2006. http://research.microsoft.com/~cmbishop/PRML/

Specific points:

If you do Gibbs sampling with continuous distributions this method, which I omitted for material-overload reasons, may help:

Suppressing random walks in Markov chain Monte Carlo using ordered overrelaxation, Radford M. Neal, Learning in graphical models, M. I. Jordan (editor), 205–228, Kluwer Academic Publishers, 1998. http://www.cs.toronto.edu/~radford/overk.abstract.html

An example of picking estimators carefully:

Speed-up of Monte Carlo simulations by sampling of rejected states, Frenkel, D, Proceedings of the National Academy of Sciences, 101(51):17571–17575, The National Academy of Sciences, 2004. http://www.pnas.org/cgi/content/abstract/101/51/17571

A key reference for auxiliary variable methods is:

Generalizations of the Fortuin-Kasteleyn-Swendsen-Wang representation and Monte Carlo algorithm, Robert G. Edwards and A. D. Sokal, Physical Review, 38:2009–2012, 1988.

Slice sampling, Radford M. Neal, Annals of Statistics, 31(3):705–767, 2003. http://www.cs.toronto.edu/~radford/slice-aos.abstract.html

Bayesian training of backpropagation networks by the hybrid Monte Carlo method, Radford M. Neal, Technical report: CRG-TR-92-1, Connectionist Research Group, University of Toronto, 1992. http://www.cs.toronto.edu/~radford/bbp.abstract.html

An early reference for parallel tempering:

Markov chain Monte Carlo maximum likelihood, Geyer, C. J, Computing Science and Statistics: Proceedings of the 23rd Symposium on the Interface, 156–163, 1991.

Sampling from multimodal distributions using tempered transitions, Radford M. Neal, Statistics and Computing, 6(4):353–366, 1996.

Further reading (2/2)

Software:

Gibbs sampling for graphical models: http://mathstat.helsinki.fi/openbugs/

Neural networks and other flexible models: http://www.cs.utoronto.ca/~radford/fbm.software.html

CODA: http://www-fis.iarc.fr/coda/

Other Monte Carlo methods:

Nested sampling is a new Monte Carlo method with some interesting properties:

Nested sampling for general Bayesian computation, John Skilling, Bayesian Analysis, 2006. (to appear, posted online June 5). http://ba.stat.cmu.edu/journal/forthcoming/skilling.pdf

Approaches based on the "multicanonical ensemble" also solve some of the problems with traditional temperature-based methods:

Multicanonical ensemble: a new approach to simulate first-order phase transitions, Bernd A. Berg and Thomas Neuhaus, Phys. Rev. Lett, 68(1):9–12, 1992. http://prola.aps.org/abstract/PRL/v68/i1/p91

A good review paper:

Extended Ensemble Monte Carlo. Y Iba. Int J Mod Phys C [Computational Physics and Physical Computation] 12(5):623-656. 2001.

Particle filters / Sequential Monte Carlo are famously successful in time series modelling, but are more generally applicable. This may be a good place to start: http://www.cs.ubc.ca/~arnaud/journals.html

Exact or perfect sampling uses Markov chain simulation but suffers no initialization bias. An amazing feat when it can be performed:

Annotated bibliography of perfectly random sampling with Markov chains, David B. Wilson. http://dbwilson.com/exact/

MCMC does not apply to doubly-intractable distributions. For what that even means and possible solutions see:

An efficient Markov chain Monte Carlo method for distributions with intractable normalising constants, J. Møller, A. N. Pettitt, R. Reeves and K. K. Berthelsen, Biometrika, 93(2):451–458, 2006.

MCMC for doubly-intractable distributions, Iain Murray, Zoubin Ghahramani and David J. C. MacKay, Proceedings of the 22nd Annual Conference on Uncertainty in Artificial Intelligence (UAI-06), Rina Dechter and Thomas S. Richardson (editors), 359–366, AUAI Press, 2006. http://www.gatsby.ucl.ac.uk/~iam23/pub/06doublyintractable/doublyintractable.

- detailedwindtour相关文档

- costwindtour

- limitedwindtour

- shimmeringwindtour

- imagewindtour

- windwindtour

- Alwindtour

星梦云:四川100G高防4H4G10M月付仅60元

星梦云怎么样?星梦云资质齐全,IDC/ISP均有,从星梦云这边租的服务器均可以备案,属于一手资源,高防机柜、大带宽、高防IP业务,一手整C IP段,四川电信,星梦云专注四川高防服务器,成都服务器,雅安服务器。星梦云目前夏日云服务器促销,四川100G高防4H4G10M月付仅60元;西南高防月付特价活动,续费同价,买到就是赚到!点击进入:星梦云官方网站地址1、成都电信年中活动机(成都电信优化线路,封锁...

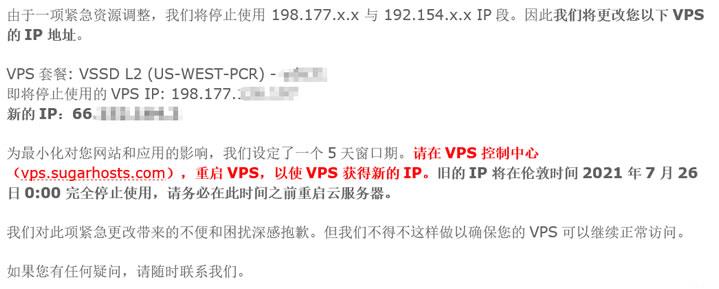

SugarHosts糖果主机商更换域名

昨天,遇到一个网友客户告知他的网站无法访问需要帮他检查到底是什么问题。这个同学的网站是我帮他搭建的,于是我先PING看到他的网站是不通的,开始以为是服务器是不是出现故障导致无法打开的。检查到他的服务器是有放在SugarHosts糖果主机商中,于是我登录他的糖果主机后台看到服务器是正常运行的。但是,我看到面板中的IP地址居然是和他网站解析的IP地址不同。看来官方是有更换域名。于是我就问 客服到底是什...

EdgeNat 新年开通优惠 - 韩国独立服务器原生IP地址CN2线路七折优惠

EdgeNat 商家在之前也有分享过几次活动,主要提供香港和韩国的VPS主机,分别在沙田和首尔LG机房,服务器均为自营硬件,电信CN2线路,移动联通BGP直连,其中VPS主机基于KVM架构,宿主机采用四路E5处理器、raid10+BBU固态硬盘!最高可以提供500Gbps DDoS防御。这次开年活动中有提供七折优惠的韩国独立服务器,原生IP地址CN2线路。第一、优惠券活动EdgeNat优惠码(限月...

windtour为你推荐

-

三星iphone支持ipad阿片类药物:您需要知道什么支持ipad重庆电信宽带管家电信的宽带上网助手是什么?csshackcss常见的hack方法有哪些googleadsense10分钟申请Google Adsense是一种怎样的体验迅雷雷鸟啊啊,想下载《看门狗》可13GB的大小,我每秒才450KB,我该怎么样才能大幅度地免费提高电脑下载win7还原系统电脑怎么恢复出厂设置win7旗舰版google搜图google自定义搜索是什么?怎么用