
CRNet: Cross-Reference Networks for Few-Shot Segmentation

Weide Liu¹, Chi Zhang¹, Guosheng Lin¹, Fayao Liu²
¹Nanyang Technological University, Singapore  ²A*Star, Singapore
E-mail: weide001@e.ntu.edu.sg, chi007@e.ntu.edu.sg, gslin@ntu.edu.sg

Abstract

Over the past few years, state-of-the-art image segmentation algorithms have been based on deep convolutional neural networks. To equip a deep network with the ability to understand a concept, humans need to collect a large amount of pixel-level annotated data to train the models, which is time-consuming and tedious. Recently, few-shot segmentation has been proposed to solve this problem. Few-shot segmentation aims to learn a segmentation model that can be generalized to novel classes with only a few training images. In this paper, we propose a cross-reference network (CRNet) for few-shot segmentation. Unlike previous works, which only predict the mask in the query image, our proposed model concurrently makes predictions for both the support image and the query image. With a cross-reference mechanism, our network can better find the co-occurrent objects in the two images, thus helping the few-shot segmentation task. We also develop a mask refinement module to recurrently refine the prediction of the foreground regions. For k-shot learning, we propose to finetune parts of the network to take advantage of multiple labeled support images. Experiments on the PASCAL VOC 2012 dataset show that our network achieves state-of-the-art performance.

1. Introduction

Deep neural networks have been widely applied to visual understanding tasks, e.g., object detection, semantic segmentation and image captioning, since their huge success in the ImageNet classification challenge [4]. Because deep models are data-driven, large-scale labeled datasets are often required to train them. However, collecting labeled data can be notoriously expensive in tasks like semantic segmentation, instance segmentation, and video segmentation. Moreover, data are usually collected for a set of specific categories, and knowledge learned in previous classes can hardly be transferred to unseen classes directly. Directly finetuning the trained models still requires a large amount of new labeled data. Few-shot learning, on the other hand, has been proposed to solve this problem. In few-shot learning tasks, models trained on previous tasks are expected to generalize to unseen tasks with only a few labeled training images.

Corresponding author: G. Lin (e-mail: gslin@ntu.edu.sg)

Figure 1. Comparison of our proposed CRNet against previous work. Previous work (upper part) unilaterally guides the segmentation of query images with support images, while in our CRNet (lower part) support and query images can guide the segmentation of each other.

In this paper, we target few-shot image segmentation. Given a novel object category, few-shot segmentation aims to find the foreground regions of this category after seeing only a few labeled examples.

Many previous works formulate the few-shot segmentation task as a guided segmentation task. The guidance information is extracted from the labeled support set for the foreground prediction in the query image, which is usually achieved by an asymmetric two-branch network structure. The model is optimized with the ground-truth query mask as the supervision.

In our work, we argue that the roles of the query and support sets can be switched in a few-shot segmentation model. Specifically, the support images can guide the prediction of the query set, and conversely, the query image can also help make predictions for the support set. Inspired by the image co-segmentation literature [7, 12, 1], we propose a symmetric Cross-Reference Network in which two heads concurrently make predictions for both the query image and the support image. The difference between our network design and previous works is shown in Fig. 1. The key component in our network design is the cross-reference module, which generates reinforced feature representations by comparing the co-occurrent features in two images. The reinforced representations are used for the downstream foreground predictions in the two images. In the meantime, the cross-reference module also makes predictions of co-occurrent objects in the two images. This sub-task provides an auxiliary loss in the training phase to facilitate the training of the cross-reference module.

As there is huge variance in object appearance, mining foreground regions in images can be a multi-step process. We develop an effective Mask Refinement Module to iteratively refine our predictions. In the initial prediction, the network is expected to locate high-confidence seed regions. Then, the confidence map, in the form of a probability map, is saved as the cache in the module and is used for later predictions. We update the cache every time we make a new prediction. After running the mask refinement module for a few steps, our model can better predict the foreground regions. We empirically demonstrate that such a lightweight module can significantly improve the performance.

When it comes to k-shot image segmentation, where more than one support image is provided, previous methods often use a 1-shot model to make predictions with each support image individually and fuse their features or predicted masks. In our paper, we propose to finetune parts of our network with the labeled support examples. As our network can make predictions for both image inputs at a time, we can use at most $k^2$ image pairs to finetune our network. An advantage of our finetuning-based method is that it can benefit from an increasing number of support images, and thus consistently increases the accuracy. In comparison, fusion-based methods can easily saturate when more support images are provided. In our experiments, we validate our model in the 1-shot, 5-shot, and 10-shot settings.

The main contributions of this paper are listed as follows:

• We propose a novel cross-reference network that concurrently makes predictions for both the query set and the support set in the few-shot image segmentation task. By mining the co-occurrent features in two images, our proposed network can effectively improve the results.
• We develop a mask refinement module with a confidence cache that is able to recurrently refine the predicted results.
• We propose a finetuning scheme for k-shot learning, which turns out to be an effective solution to handle multiple support images.
• Experiments on PASCAL VOC 2012 demonstrate that our method significantly outperforms baseline results and achieves new state-of-the-art performance on the 5-shot segmentation task.

2. Related Work

2.1. Few-shot learning

Few-shot learning aims to learn a model which can be easily transferred to new tasks with limited training data available. Few-shot learning is widely explored in image classification tasks. Previous methods can be roughly divided into two categories based on whether the model needs finetuning at test time. In non-finetuned methods, parameters learned at training time are kept fixed at the testing stage. For example, [19, 22, 21, 24] are metric-based approaches where an embedding encoder and a distance metric are learned to determine image-pair similarity. These methods have the advantage of fast inference without further parameter adaptation. However, when multiple support images are available, their performance can easily saturate. In finetuning-based methods, the model parameters need to be adapted to the new tasks for predictions. For example, [3] demonstrates that by only finetuning the fully connected layer, models learned on training classes can yield state-of-the-art few-shot performance on new classes. In our work, we use a non-finetuned feed-forward model to handle 1-shot learning and adopt model finetuning in the k-shot setting to benefit from multiple labeled support images. The task of few-shot learning is also related to the open-set problem [20], where the goal is only to detect data from novel classes.

2.2. Segmentation

Semantic segmentation is a fundamental computer vision task which aims to classify each pixel in the image. State-of-the-art methods formulate image segmentation as a dense prediction task and adopt fully convolutional networks to make predictions [2, 11]. Usually, a pre-trained classification network is used as the network backbone by removing the fully connected layers at the end. To make pixel-level dense predictions, encoder-decoder structures [9, 11] are often used to reconstruct high-resolution prediction maps. Typically, an encoder gradually downsamples the feature maps, which aims to acquire a large field of view and capture abstract feature representations. Then, the decoder gradually recovers the fine-grained information. Skip connections are often used to fuse high-level and low-level features for better predictions. In our network, we also follow the encoder-decoder design, transferring the guidance information in the low-resolution maps and using decoders to recover details.

2.3. Few-shot segmentation

Few-shot segmentation is a natural extension of few-shot classification to the pixel level. Since Shaban et al. [17] first proposed this task, many deep learning-based methods have been proposed. Most previous works formulate few-shot segmentation as a guided segmentation task. For example, in [17], the side branch takes the labeled support image as the input and regresses the network parameters in the main branch to make foreground predictions for the query image. The method in [26] shares the same spirit and fuses the embeddings of the support branches into the query branch with a dense comparison module. Dong et al. [5] draw inspiration from the success of the Prototypical Network [19] in few-shot classification, and propose dense prototype learning with Euclidean distance as the metric for segmentation tasks. Similarly, Zhang et al. [27] propose a cosine similarity guidance network to weight features for the foreground predictions in the query branch. Some previous works use recurrent structures to refine the segmentation predictions [6, 26]. All previous methods only use the foreground mask in the query image as the training supervision, while in our network, the query set and the support set guide each other and both branches make foreground predictions for training supervision.

2.4. Image co-segmentation

Image co-segmentation is a well-studied task which aims to jointly segment the common objects in paired images. Many approaches have been proposed to solve the object co-segmentation problem. Rother et al. [15] propose to minimize an energy function of a histogram matching term with an MRF to enforce similar foreground statistics. Rubinstein et al. [16] capture the sparsity and visual variability of the common object from pairs of images with dense correspondences. Joulin et al. [7] solve the common object problem with an efficient convex quadratic approximation of energy with discriminative clustering. With the prevalence of deep neural networks, many deep learning-based methods have been proposed. In [12], the model retrieves common object proposals with a Siamese network. Chen et al. [1] adopt channel attention to weight features for the co-segmentation task. Deep learning-based approaches have significantly outperformed non-learning-based methods.

3. Task Definition

Few-shot segmentation aims to find the foreground pixels in test images given only a few pixel-level annotated images. The training and testing of the model are conducted on two datasets with no overlapping categories. At both the training and testing stages, the labeled example images are called the support set, which serves as a meta-training set, and the unlabeled meta-testing image is called the query set. To guarantee good generalization performance at test time, the training and evaluation of the model are accomplished by episodically sampling the support set and the query set.

Given a network $R_\theta$ parameterized by $\theta$, in each episode we first sample a target category $c$ from the category set $C$ of the dataset. Based on the sampled class, we then sample $k+1$ labeled images $\{(x_s^1, y_s^1), (x_s^2, y_s^2), \ldots, (x_s^k, y_s^k), (x_q, y_q)\}$ that all contain the sampled category $c$. Among them, the first $k$ labeled images constitute the support set $S$ and the last one is the query set $Q$. After that, we make predictions on the query image by inputting the support set and the query image into the model, $\hat{y}_q = R_\theta(S, x_q)$. At training time, we learn the model parameters $\theta$ by optimizing the cross-entropy loss $\mathcal{L}(\hat{y}_q, y_q)$, and repeat this procedure until convergence.
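The episodic protocol above can be sketched in Python. This is an illustrative sketch, not the authors' code: the dataset layout (a dict from each category name to labeled `(image, mask)` pairs that contain that category) and the helper name `sample_episode` are assumptions.

```python
import random
from typing import Any, Dict, List, Tuple

Example = Tuple[Any, Any]  # one labeled example (x, y): an image and its binary mask


def sample_episode(dataset: Dict[str, List[Example]], k: int, rng: random.Random):
    """Sample one k-shot episode following the protocol described above.

    `dataset` is a hypothetical mapping from each category c in C to labeled
    examples that all contain c. Returns (c, S, x_q, y_q).
    """
    # Sample the target category c from the category set C.
    c = rng.choice(sorted(dataset))
    # Sample k + 1 distinct labeled images that all contain c.
    examples = rng.sample(dataset[c], k + 1)
    # The first k images form the support set S; the last one is the query.
    support = examples[:k]
    x_q, y_q = examples[k]
    return c, support, x_q, y_q


# Training would then predict y_hat_q = R_theta(S, x_q) and minimize the
# cross-entropy L(y_hat_q, y_q), repeating over many sampled episodes.
```

Because the training and test categories do not overlap, the same sampler would simply be run over two disjoint category sets.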

4. Method

In this section, we introduce the proposed cross-reference network for solving few-shot image segmentation. We first describe our network in the 1-shot case. After that, we describe our finetuning scheme in the case of k-shot learning. Our network includes four key modules: the Siamese encoder, the cross-reference module, the condition module, and the mask refinement module. The overall architecture is shown in Fig. 2.

4.1. Method overview

Different from previous few-shot segmentation methods [26, 17, 5], which unilaterally guide the segmentation of query images with support images, our proposed CRNet enables support and query images to guide the segmentation of each other. We argue that the relationship between support-query image pairs is vital to few-shot segmentation learning. Experiments in Table 2 validate the effectiveness of our new architecture design. As shown in Figure 2, our model learns to perform few-shot segmentation as follows. For every query-support pair, we encode the image pair into deep features with the Siamese encoder, and then apply the cross-reference module to mine co-occurrent object features. To fully utilize the annotated mask, the condition module incorporates the category information of the support set annotations for foreground mask predictions, and the mask refinement module recurrently caches the confidence region maps for the final foreground prediction. In the case of k-shot learning, previous works [27, 26, 17] simply average the results of different 1-shot predictions, while we adopt an optimization-based method that finetunes the model to make use of more support data. Table 4 demonstrates the advantages of our method over previous works.

Figure 2. The pipeline of our network architecture. Our network mainly consists of a Siamese encoder, a cross-reference module, a condition module, and a mask refinement module. Our network adopts a symmetric design. The Siamese encoder maps the query and support images into feature representations. The cross-reference module mines the co-occurrent features in two images to generate reinforced representations. The condition module fuses the category-relevant feature vectors into feature maps to emphasize the target category. The mask refinement module saves the confidence maps of the last prediction into the cache and recurrently refines the predicted masks.

4.2. Siamese encoder

The Siamese encoder is a pair of parameter-shared convolutional neural networks that encode the query image and the support image into feature maps. Unlike the models in [17, 14], we use a shared feature encoder to encode the support and the query images. By embedding the images into the same space, our cross-reference module can better mine co-occurrent features to locate the foreground regions. To acquire representative feature embeddings, we use skip connections to utilize multiple-layer features. As observed in the CNN feature visualization literature [26, 23], features in lower layers often relate to low-level cues and features in higher layers often relate to segmentation cues, so we combine the lower-level and higher-level features and pass them to the following modules.
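The two properties described here, shared weights and multi-level feature concatenation, can be illustrated with a toy NumPy sketch. The 1×1 stages below are hypothetical stand-ins for the ResNet-50 layer3 and layer4 blocks mentioned in Section 5.1; because dilation keeps both outputs at the same resolution, they can be concatenated along the channel axis without resizing.

```python
import numpy as np


def conv1x1_relu(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """A 1x1 convolution (a per-pixel matrix product) followed by ReLU.

    x: (C_in, H, W) feature map; w: (C_out, C_in) weights.
    """
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


class ToySiameseEncoder:
    """Hypothetical two-stage encoder whose weights are shared by both branches."""

    def __init__(self, c_in: int, c_mid: int, c_out: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.w_low = 0.1 * rng.standard_normal((c_mid, c_in))    # stands in for layer3
        self.w_high = 0.1 * rng.standard_normal((c_out, c_mid))  # stands in for layer4

    def encode(self, x: np.ndarray) -> np.ndarray:
        f_low = conv1x1_relu(x, self.w_low)        # lower-level features
        f_high = conv1x1_relu(f_low, self.w_high)  # higher-level features, same H x W
        return np.concatenate([f_low, f_high], axis=0)  # multi-level features

    def __call__(self, x_s: np.ndarray, x_q: np.ndarray):
        # One set of weights encodes both images, so both land in the same space.
        return self.encode(x_s), self.encode(x_q)
```

Feeding the same image to both branches yields identical features, which is exactly the property that lets the cross-reference module compare the two embeddings channel by channel.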

4.3. Cross-Reference Module

The cross-reference module is designed to mine co-occurrent features in two images and generate updated representations. The design of the module is shown in Fig. 3. Given two input feature maps generated by the Siamese encoder, we first use global average pooling to acquire the global statistics of the two images. Then, the two feature vectors are sent to a pair of two-layer fully connected (FC) layers, respectively. The Sigmoid activation function attached after the FC layers transforms the vector values into channel importance scores in the range [0, 1]. After that, the vectors in the two branches are fused by element-wise multiplication. Intuitively, only the features common to the two branches will have a high activation in the fused importance vector. Finally, we use the fused vector to weight the input feature maps to generate reinforced feature representations. In comparison to the raw features, the reinforced features focus more on the co-occurrent representations.
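The computation described above can be written compactly. The NumPy sketch below follows the steps in order (global average pooling, a per-branch two-layer FC, sigmoid, element-wise product, channel reweighting). The ReLU between the two FC layers, the hidden width `R`, and the parameter layout are assumptions not stated in the text.

```python
import numpy as np


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def channel_importance(f, w1, b1, w2, b2):
    """Per-channel importance in [0, 1] for a (C, H, W) feature map.

    w1: (R, C), b1: (R,), w2: (C, R), b2: (C,). R is an assumed hidden width.
    """
    v = f.mean(axis=(1, 2))           # global average pooling -> (C,)
    h = np.maximum(w1 @ v + b1, 0.0)  # first FC layer (ReLU assumed)
    return sigmoid(w2 @ h + b2)       # second FC layer + sigmoid -> (C,)


def cross_reference(f_s, f_q, params_s, params_q):
    """Map input features (F_s, F_q) to reinforced features (G_s, G_q).

    Each branch has its own FC parameters; the fused vector is shared.
    """
    a = channel_importance(f_s, *params_s) * channel_importance(f_q, *params_q)
    # The product is large only for channels that are active in both images.
    g_s = f_s * a[:, None, None]
    g_q = f_q * a[:, None, None]
    return g_s, g_q
```

Since each factor lies in [0, 1], the product acts like a soft logical AND: a channel that is weak in either image is suppressed in both reinforced maps.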

Based on the reinforced feature representations, we add a head to directly predict the co-occurrent objects in the two images during training. This sub-task aims to facilitate the learning of the cross-reference module so that it mines better feature representations for the downstream tasks. To generate the predictions of the co-occurrent objects in two images, the reinforced feature maps in the two branches are sent to a decoder to generate the predicted maps. The decoder is composed of a convolutional layer followed by ASPP [2] layers; finally, a convolutional layer generates a two-channel prediction corresponding to the foreground and background scores.

Figure 3. The cross-reference module. Given the input feature maps from the support and the query sets (F_s, F_q), the cross-reference module generates updated feature representations (G_s, G_q) by inspecting the co-occurrent features.
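The co-occurrence head outputs two channels (background and foreground scores), so its auxiliary supervision can be written as a per-pixel two-class cross-entropy. The NumPy sketch below shows that loss. The text does not specify the targets or weighting of the auxiliary term, so `total_loss`, its equal default weighting (`aux_weight=1.0`) and the use of the category masks as co-occurrence targets are assumptions.

```python
import numpy as np


def pixel_cross_entropy(logits: np.ndarray, mask: np.ndarray) -> float:
    """Mean per-pixel cross-entropy for a two-channel prediction.

    logits: (2, H, W) scores, channel 0 = background, 1 = foreground.
    mask:   (H, W) labels in {0, 1}.
    """
    labels = mask.astype(np.intp)
    z = logits - logits.max(axis=0, keepdims=True)  # numerically stable log-softmax
    log_p = z - np.log(np.exp(z).sum(axis=0, keepdims=True))
    h, w = labels.shape
    rows, cols = np.arange(h)[:, None], np.arange(w)[None, :]
    return float(-log_p[labels, rows, cols].mean())  # log-probability of the true class


def total_loss(pred_s, pred_q, co_s, co_q, y_s, y_q, aux_weight=1.0):
    """Hypothetical objective: main losses for both branches plus the auxiliary
    co-occurrence losses, assuming both use the category masks y_s and y_q."""
    main = pixel_cross_entropy(pred_s, y_s) + pixel_cross_entropy(pred_q, y_q)
    aux = pixel_cross_entropy(co_s, y_s) + pixel_cross_entropy(co_q, y_q)
    return main + aux_weight * aux
```

With all-zero logits every pixel gets probability 0.5 for each class, so the loss is ln 2 per head.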

4.4. Condition Module

To fully utilize the support set annotations, we design a condition module to efficiently incorporate the category information for foreground mask predictions. The condition module takes the reinforced feature representations generated by the cross-reference module and a category-relevant vector as inputs. The category-relevant vector is the fused feature embedding of the target category, which is obtained by applying foreground average pooling [26] over the category region. As the goal of few-shot segmentation is to find only the foreground mask of the assigned object category, the category-relevant vector serves as a condition to segment the target category. To obtain a category-relevant embedding, previous works opt to filter out the background regions in the input images [14, 17] or in the feature representations [26, 27]. We choose to do so both at the feature level and in the input image. The category-relevant vector is fused with the reinforced feature maps in the condition module by bilinearly upsampling the vector to the same spatial size as the feature maps and concatenating them. Finally, we add a residual convolution to process the concatenated features. The structure of the condition module can be found in Fig. 4. The condition modules in the support branch and the query branch have the same structure and share all the parameters.
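A minimal NumPy sketch of the condition module follows, assuming the support mask has already been resized to the feature resolution. Foreground average pooling averages the support features over foreground pixels; bilinearly upsampling a 1×1 vector to H×W yields the same vector at every position, so the sketch simply tiles it. The fusion and residual layers are modeled as hypothetical 1×1 convolutions with weights `w_fuse` and `w_res` (the paper uses 3×3 convolutions), and only the feature-level background filtering is shown, not the input-image masking.

```python
import numpy as np


def foreground_average_pooling(feat, mask, eps=1e-5):
    """Category-relevant vector: mean of (C, H, W) features over mask == 1 pixels."""
    return (feat * mask[None]).sum(axis=(1, 2)) / (mask.sum() + eps)


def conv1x1_relu(x, w):
    """Per-pixel matrix product followed by ReLU; x: (C_in, H, W), w: (C_out, C_in)."""
    return np.maximum(np.einsum("oc,chw->ohw", w, x), 0.0)


def condition_module(feat, vec, w_fuse, w_res):
    """Fuse a (C,) category vector into (C, H, W) reinforced features.

    w_fuse: (C, 2C) and w_res: (C, C) are hypothetical 1x1-conv weights,
    shared between the support and query branches.
    """
    c, h, w = feat.shape
    tiled = np.broadcast_to(vec[:, None, None], (c, h, w))  # upsampled 1x1 vector
    x = conv1x1_relu(np.concatenate([feat, tiled], axis=0), w_fuse)  # concat + fuse
    return x + conv1x1_relu(x, w_res)  # residual convolution
```

The same `condition_module` call, with the same weights, would be applied to the support features and to the query features.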

Figure 4. The condition module. Our condition module fuses the category-relevant features into representations for better predictions of the target category.

4.5. Mask Refinement Module

As is often observed in the weakly supervised semantic segmentation literature [26, 8], directly predicting the object masks can be difficult. It is a common principle to first locate seed regions and then refine the results. Based on this principle, we design a mask refinement module to refine the predicted mask step by step. Our motivation is that the probability maps in a single feed-forward prediction can reflect where the confident regions are in the model prediction. Based on the confident regions and the image features, we can gradually optimize the mask and find the whole object regions.

As shown in Fig. 5, our mask refinement module has two inputs. One is the saved confidence map in the cache, and the second input is the concatenation of the outputs from the condition module and the cross-reference module. For the initial prediction, the cache is initialized with a zero mask, and the module makes predictions solely based on the input feature maps. The module cache is updated with the generated probability map every time the module makes a new prediction. We run this module multiple times to generate a final refined mask.
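The cache mechanism amounts to a short recurrent loop. In the sketch below, `refine_step` is a hypothetical callable standing in for the module's layers (it maps the input features and the cached confidence map to a new foreground probability map); the default of 5 steps matches the test-time setting in Section 5.1.

```python
from typing import Callable

import numpy as np


def refine_mask(features: np.ndarray,
                refine_step: Callable[[np.ndarray, np.ndarray], np.ndarray],
                n_steps: int = 5) -> np.ndarray:
    """Recurrently refine a mask with a confidence cache.

    features: (C, H, W) concatenated outputs of the condition and
    cross-reference modules.
    """
    cache = np.zeros(features.shape[1:])     # the first step sees an all-zero mask
    for _ in range(n_steps):
        prob = refine_step(features, cache)  # predict from features + cached confidence
        cache = prob                         # the newest probability map replaces the cache
    return cache
```

The input features stay fixed across iterations; only the cached probability map changes, which is what lets each step start from the previous step's confident regions.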

The mask refinement module includes three main blocks: the downsample block, the global convolution block, and the combine block. The downsample block downsamples the feature maps by a factor of 2. The downsampled features are then upsampled to the original size and fused with features in the opposite branch. The global convolution block [13] aims to capture features in a large field of view while containing few parameters. It includes two groups of 1×7 and 7×1 convolutional kernels. The combine block effectively fuses the feature branch and the cached branch to generate refined feature representations.
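To make the "large field of view with few parameters" claim concrete, the sketch below counts weights and checks the receptive field. It assumes the two-branch layout of the global convolutional network [13] (one branch applies k×1 then 1×k, the other 1×k then k×1), 256 input and output channels for every convolution (following Section 5.1), and no biases; these are illustrative assumptions, not the module's exact configuration.

```python
import numpy as np


def conv_params(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Weight count of a kh x kw convolution without bias."""
    return kh * kw * c_in * c_out


def gcn_params(k: int, c: int) -> int:
    """Two branches, each a (k x 1) conv followed by a (1 x k) conv, or vice versa."""
    branch = conv_params(k, 1, c, c) + conv_params(1, k, c, c)
    return 2 * branch


def separable_support(k: int, size: int = 15) -> int:
    """Pixels reached from one impulse by a 1 x k then a k x 1 all-ones kernel."""
    img = np.zeros((size, size))
    img[size // 2, size // 2] = 1.0

    def box(v):
        return np.convolve(v, np.ones(k), mode="same")

    out = np.apply_along_axis(box, 1, img)  # 1 x k along each row
    out = np.apply_along_axis(box, 0, out)  # k x 1 along each column
    return int((out > 0).sum())


print(gcn_params(7, 256), conv_params(7, 7, 256, 256), separable_support(7))
# → 1835008 3211264 49
```

Under these assumptions the block uses 28·C² weights, about 57% of a single 7×7 convolution (49·C²), while still covering a full 7×7 neighborhood.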

4.6. Finetuning for K-Shot Learning

In the case of k-shot learning, we propose to finetune our network to take advantage of multiple labeled support images. As our network can make predictions for two images at a time, we can use at most $k^2$ image pairs to finetune our network. At the evaluation stage, we randomly sample an image pair from the labeled support set to finetune our model. We keep the parameters in the Siamese encoder fixed and only finetune the remaining modules. In our experiments, we demonstrate that our finetuning-based method can consistently improve the results when more labeled support images are available, while the fusion-based methods in previous works often saturate as the number of support images increases.
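The pair-sampling step of the finetuning scheme can be sketched as follows. Assumptions: pairs are formed from two distinct support images (Table 4 notes that the scheme needs at least two images), giving k(k−1) ordered pairs, within the stated bound of $k^2$; the helper names and the `encoder.` parameter-name prefix used to freeze the Siamese encoder are hypothetical.

```python
import itertools
import random
from typing import Any, Dict, Iterator, List, Tuple

Example = Tuple[Any, Any]  # (image, mask)


def finetune_pairs(support: List[Example], rng: random.Random,
                   n_iters: int) -> Iterator[Tuple[Example, Example]]:
    """Yield one randomly sampled support pair per finetuning iteration.

    Both images in a pair are labeled, so the predictions for both can be
    supervised, and each image serves as the reference for the other.
    """
    if len(support) < 2:
        raise ValueError("finetuning needs at least two labeled support images")
    pairs = list(itertools.permutations(range(len(support)), 2))  # k(k-1) ordered pairs
    for _ in range(n_iters):
        i, j = rng.choice(pairs)
        yield support[i], support[j]


def trainable_parameters(named_params: Dict[str, Any]) -> Dict[str, Any]:
    """Keep the Siamese encoder fixed; every other module is finetuned."""
    return {n: p for n, p in named_params.items() if not n.startswith("encoder.")}
```

Because the number of distinct pairs grows quadratically with k, each extra support image adds several new training pairs, which is one way to read why finetuning keeps improving from 5 to 10 shots.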

Figure 5. The mask refinement module. The module saves the generated probability map from the last step into the cache and recurrently optimizes the predictions.

5. Experiment

5.1. Implementation Details

In the Siamese encoder, we exploit multi-level features from the ImageNet-pretrained ResNet-50 as the image representations. We use dilated convolutions so that the feature maps after layer3 and layer4 have a fixed size of 1/8 of the input image, and concatenate them for the final prediction. All the convolutional layers in our proposed modules have a kernel size of 3×3 and generate features of 256 channels, followed by the ReLU activation function. At test time, we recurrently run the mask refinement module 5 times to refine the predicted masks. In the case of k-shot learning, we fix the Siamese encoder and finetune the remaining parameters.

5.2. Dataset and Evaluation Metric

We conduct cross-validation experiments on the PASCAL VOC 2012 dataset to validate our network design. To compare our model with previous works, we adopt the same category divisions and test settings that were first proposed in [17]. In the cross-validation experiments, 20 object categories are evenly divided into 4 folds, with three folds as the training classes and one fold as the testing classes. The category division is shown in Table 1. We report the average performance over the 4 testing folds. For the evaluation metric, we use the standard mean Intersection-over-Union (mIoU) of the classes in the testing fold. For more details about the dataset and the evaluation metric, please refer to [17].
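A sketch of how the per-fold mIoU can be accumulated is given below. Assumption: for each test class, intersections and unions are summed over all episodes of that class before dividing, and the per-class IoUs are then averaged; [17] gives the exact protocol. The class name `FoldMeanIoU` is hypothetical.

```python
from collections import defaultdict

import numpy as np


class FoldMeanIoU:
    """Hypothetical accumulator for the mIoU of the test classes in one fold."""

    def __init__(self):
        self.inter = defaultdict(int)
        self.union = defaultdict(int)

    def update(self, cls, pred, gt):
        """Add one episode; pred and gt are binary (H, W) foreground masks for `cls`."""
        pred, gt = pred.astype(bool), gt.astype(bool)
        self.inter[cls] += int(np.logical_and(pred, gt).sum())
        self.union[cls] += int(np.logical_or(pred, gt).sum())

    def value(self) -> float:
        """Per-class IoU (pooled over episodes), averaged over the fold's classes."""
        ious = [self.inter[c] / self.union[c] for c in self.union if self.union[c] > 0]
        return float(np.mean(ious))
```

The number reported for PASCAL VOC would then be the mean of the four per-fold values.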

6. Ablation study

The goal of the ablation study is to inspect each component in our network design. Our ablation experiments are conducted on the PASCAL VOC dataset. We conduct cross-validation 1-shot experiments and report the average performance over the four splits.

Fold | Categories
---- | ----------
0 | aeroplane, bicycle, bird, boat, bottle
1 | bus, car, cat, chair, cow
2 | dining table, dog, horse, motorbike, person
3 | potted plant, sheep, sofa, train, tv/monitor

Table 1. The class division of the PASCAL VOC 2012 dataset proposed in [17].

Condition | Cross-Reference Module | 1-shot
--------- | ---------------------- | ------
✓ | | 36.3
 | ✓ | 43.3
✓ | ✓ | 49.1

Table 2. Ablation study on the condition module and the cross-reference module. The cross-reference module brings a large performance improvement over the baseline model (Condition only).

1-shot
------
49.1
50.3
53.4
55.2

Table 3. Ablation experiments on the multi-level feature (Multi-Level), multi-scale input (Multi-Scale), and the Mask Refine module. Every module brings a performance improvement over the baseline model.

In Table 2, we first investigate the contributions of two important network components: the condition module and the cross-reference module. As shown, there are significant performance drops if we remove either component from the network. In particular, our proposed cross-reference module has a large impact on the predictions: our network improves on the counterpart model without the cross-reference module by more than 10 mIoU points.

Figure 6. Our qualitative examples on the PASCAL VOC dataset. The first row is the support set and the second row is the query set. The third row is our predicted results and the fourth row is the ground truth. Even when the query images contain objects from multiple classes, our network can still successfully segment the target category indicated by the support mask.

Method | 1-shot | 5-shot | 10-shot
------ | ------ | ------ | -------
Fusion | 49.1 | 50.2 | 49.9
Finetune | N/A | 57.5 | 59.1
Finetune + Fusion | N/A | 57.6 | 58.8

Table 4. k-shot experiments. We compare our finetuning-based method with the fusion method. Our method yields consistent performance improvement as the number of support images increases. For the 1-shot case, finetuning results are not available, as CRNet needs at least two images to apply our finetuning scheme.

Method | Backbone | mIoU | IoU
------ | -------- | ---- | ---
OSLM [17] | VGG16 | 40.8 | 61.3
co-fcn [14] | VGG16 | 41.1 | 60.9
sg-one [27] | VGG16 | 46.3 | 63.1
R-DFCN [18] | VGG16 | 40.1 | 60.9
PL [5] | VGG16 | - | 61.2
A-MCG [6] | ResNet-50 | - | 61.2
CANet [26] | ResNet-50 | 55.4 | 66.2
PGNet [25] | ResNet-50 | 56.0 | 69.9
CRNet | VGG16 | 55.2 | 66.4
CRNet | ResNet-50 | 55.7 | 66.8

Table 5. Comparison with the state-of-the-art methods under the 1-shot setting. Our proposed network achieves state-of-the-art performance under both evaluation metrics.

To investigate how much the scale variance of the objects influences the network performance, we run a multi-scale test experiment with our network. Specifically, at test time, we resize the support image and the query image to [0.75, 1.25] of the original image size and conduct the inference. The output predicted mask of the resized query image is bilinearly resized to the original image size. We fuse the predictions under different image scales.
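The multi-scale test can be sketched as follows. Several details are assumptions: the scales are taken as 0.75, 1.0 and 1.25 (the text gives [0.75, 1.25]), the fusion is a plain average of probability maps, images and masks are treated as single-channel 2-D arrays, and `predict` is a hypothetical callable wrapping the network.

```python
from typing import Callable, Tuple

import numpy as np


def resize_bilinear(img: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinearly resize a 2-D array using half-pixel centers."""
    in_h, in_w = img.shape
    ys = np.clip((np.arange(out_h) + 0.5) * in_h / out_h - 0.5, 0, in_h - 1)
    xs = np.clip((np.arange(out_w) + 0.5) * in_w / out_w - 0.5, 0, in_w - 1)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, in_h - 1), np.minimum(x0 + 1, in_w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    top = img[y0][:, x0] * (1 - wx) + img[y0][:, x1] * wx
    bottom = img[y1][:, x0] * (1 - wx) + img[y1][:, x1] * wx
    return top * (1 - wy) + bottom * wy


def scaled_size(shape: Tuple[int, int], s: float) -> Tuple[int, int]:
    return max(1, round(shape[0] * s)), max(1, round(shape[1] * s))


def multi_scale_predict(predict: Callable, x_s, y_s, x_q, scales=(0.75, 1.0, 1.25)):
    """Average the query probability maps obtained at several input scales."""
    h, w = x_q.shape
    fused = np.zeros((h, w))
    for s in scales:
        prob = predict(resize_bilinear(x_s, *scaled_size(x_s.shape, s)),
                       # a soft resized mask; nearest-neighbor would keep it binary
                       resize_bilinear(y_s.astype(float), *scaled_size(y_s.shape, s)),
                       resize_bilinear(x_q, *scaled_size(x_q.shape, s)))
        fused += resize_bilinear(prob, h, w)  # back to the original query size
    return fused / len(scales)
```

Resizing every prediction back to the original query size before averaging is what makes maps from different scales directly comparable pixel by pixel.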

As shown in Table 3, the multi-scale input test brings a 1.2 mIoU improvement in the 1-shot setting.

Method | Backbone | mIoU | IoU
------ | -------- | ---- | ---
OSLM [17] | VGG16 | 43.9 | 61.5
co-fcn [14] | VGG16 | 41.4 | 60.2
sg-one [27] | VGG16 | 47.1 | 65.9
R-DFCN [18] | VGG16 | 45.3 | 66.0
PL [5] | VGG16 | - | 62.3
A-MCG [6] | ResNet-50 | - | 62.2
CANet [26] | ResNet-50 | 57.1 | 69.6
PGNet [25] | ResNet-50 | 58.5 | 70.5
CRNet | VGG16 | 58.5 | 71.0
CRNet | ResNet-50 | 58.8 | 71.5

Table 6. Comparison with the state-of-the-art methods under the 5-shot setting. Our proposed network outperforms all previous methods and achieves new state-of-the-art performance under both evaluation metrics.

We also investigate the choice of features in the network backbone in Table 3. We compare the multi-level feature embeddings with the features solely from the last layer. Our model with multi-level features provides an improvement of 1.8 mIoU. This indicates that, to better locate the common objects in two images, middle-level features are also important and helpful.

To further inspect the effectiveness of the mask refinement module, we design a baseline model that removes the cached branch. In this case, the mask refinement block makes predictions solely based on the input features, and we only run the mask refinement module once. As shown in Table 3, our mask refinement module brings a 3.1 mIoU improvement over this baseline.

In the k-shot setting, we compare our finetuning-based method with the fusion-based methods widely used in previous works. For the fusion-based method, we make an inference with each of the support images and average their probability maps as the final prediction. The comparison is shown in Table 4. In the 5-shot setting, the finetuning-based method outperforms the 1-shot baseline by 8.4 mIoU, which is significantly better than the fusion-based method. When 10 support images are available, our finetuning-based method shows even more advantages: its performance continues to increase, while the fusion-based method's performance begins to drop.
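For comparison, the fusion baseline described above reduces to averaging 1-shot probability maps. In this sketch `predict` is a hypothetical 1-shot inference callable, and the 0.5 threshold used to binarize the averaged map is an assumption (it is equivalent to taking the argmax of a two-class softmax).

```python
from typing import Any, Callable, List, Tuple

import numpy as np


def fusion_predict(predict: Callable[[Any, Any, Any], np.ndarray],
                   support: List[Tuple[Any, Any]], x_q: Any):
    """k-shot fusion baseline: one 1-shot inference per support image, then average.

    Returns the averaged foreground probability map and its binary mask.
    """
    probs = [predict(x_s, y_s, x_q) for x_s, y_s in support]
    mean_prob = np.mean(probs, axis=0)
    return mean_prob, (mean_prob > 0.5).astype(np.uint8)
```

No parameters change in this baseline, so extra support images can only shift the average; the finetuning scheme, by contrast, updates the non-encoder modules with every additional labeled image.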

6.1. MS COCO

COCO 2014 [10] is a challenging large-scale dataset which contains 80 object categories. Following [26], we choose 40 classes for training, 20 classes for validation and 20 classes for testing. As shown in Table 7, the results again validate the designs in our network.

1-shot | 5-shot
------ | ------
43.3 | 44.0
38.5 | 42.7
44.9 | 45.6
45.8 | 47.2

Table 7. Ablation study on the condition module, the cross-reference module and the Mask-Refine module on the MS COCO dataset.

6.2. Comparison with the State-of-the-Art Results

We compare our network with state-of-the-art methods on the PASCAL VOC 2012 dataset. Table 5 shows the performance of different methods in the 1-shot setting. We use IoU to denote the evaluation metric proposed in [14]. The difference between the two metrics is that the IoU metric also incorporates the background into the Intersection-over-Union computation and ignores the image category.
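The difference between the two metrics can be made concrete with a short sketch of the IoU metric of [14] (often called FB-IoU): foreground and background are treated as two classes regardless of the image category, and their IoUs are averaged. Pooling the counts over the whole test set, as done here, is an assumption; the exact protocol is given in [14].

```python
import numpy as np


def fb_iou(preds, gts) -> float:
    """Foreground-background IoU over a test set, ignoring the image category.

    preds, gts: sequences of binary (H, W) masks. Counts are pooled over all
    images (an assumption) before the background and foreground IoUs are averaged.
    """
    inter, union = np.zeros(2), np.zeros(2)
    for pred, gt in zip(preds, gts):
        pred, gt = pred.astype(bool), gt.astype(bool)
        # index 0: background, index 1: foreground
        for k, (p, g) in enumerate(((~pred, ~gt), (pred, gt))):
            inter[k] += np.logical_and(p, g).sum()
            union[k] += np.logical_or(p, g).sum()
    valid = union > 0
    return float(np.mean(inter[valid] / union[valid]))
```

Because the large, mostly easy background class enters the average, this metric is typically much higher than the category-wise mIoU, consistent with the two columns of Tables 5 and 6.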

5-Shot Experiments. The comparison of 5-shot segmentation results under the two evaluation metrics is shown in Table 6. Our method achieves new state-of-the-art performance under both evaluation metrics.

7. Conclusion

In this paper, we have presented a novel cross-reference network for few-shot segmentation. Unlike previous work, which unilaterally guides the segmentation of query images with support images, our two-head design concurrently makes predictions in both the query image and the support image to help the network better locate the target category. We develop a mask refinement module with a cache mechanism which can effectively improve the prediction performance. In the k-shot setting, our finetuning-based method can take advantage of more annotated data and significantly improves the performance. Extensive ablation experiments on the PASCAL VOC 2012 dataset validate the effectiveness of our design. Our model achieves state-of-the-art performance on the PASCAL VOC 2012 dataset.

Acknowledgements

This research is supported by the National Research Foundation Singapore under its AI Singapore Programme (Award Number: AISG-RP-2018-003) and the MOE Tier-1 research grants: RG126/17 (S) and RG22/19 (S). This research is also partly supported by the Delta-NTU Corporate Lab with funding support from Delta Electronics Inc. and the National Research Foundation (NRF) Singapore.

References

[1] Hong Chen, Yifei Huang, and Hideki Nakayama. Semantic aware attention based deep object co-segmentation. arXiv preprint arXiv:1810.06859, 2018.
[2] Liang-Chieh Chen, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L Yuille. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Transactions on Pattern Analysis and Machine Intelligence, 40(4):834–848, 2018.
[3] Wei-Yu Chen, Yen-Cheng Liu, Zsolt Kira, Yu-Chiang Wang, and Jia-Bin Huang. A closer look at few-shot classification. In International Conference on Learning Representations, 2019.
[4] Jia Deng, Wei Dong, Richard Socher, Li-Jia Li, Kai Li, and Li Fei-Fei. ImageNet: A large-scale hierarchical image database. In CVPR, pages 248–255, 2009.
[5] Nanqing Dong and Eric Xing. Few-shot semantic segmentation with prototype learning. In BMVC, 2018.
[6] Tao Hu, Pengwan Yang, Chiliang Zhang, Gang Yu, Yadong Mu, and Cees G M Snoek. Attention-based multi-context guiding for few-shot semantic segmentation. 2019.
[7] Armand Joulin, Francis Bach, and Jean Ponce. Multi-class cosegmentation. In 2012 IEEE Conference on Computer Vision and Pattern Recognition, pages 542–549. IEEE, 2012.
[8] Alexander Kolesnikov and Christoph H Lampert. Seed, expand and constrain: Three principles for weakly-supervised image segmentation. In European Conference on Computer Vision, pages 695–711. Springer, 2016.
[9] Guosheng Lin, Anton Milan, Chunhua Shen, and Ian D Reid. RefineNet: Multi-path refinement networks for high-resolution semantic segmentation. In CVPR, volume 1, page 5, 2017.
[10] Tsung-Yi Lin, Michael Maire, Serge Belongie, James Hays, Pietro Perona, Deva Ramanan, Piotr Dollár, and C Lawrence Zitnick. Microsoft COCO: Common objects in context. In ECCV, pages 740–755, 2014.
[11] Jonathan Long, Evan Shelhamer, and Trevor Darrell. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3431–3440, 2015.
[12] Prerana Mukherjee, Brejesh Lall, and Snehith Lattupally. Object cosegmentation using deep Siamese network. arXiv preprint arXiv:1803.02555, 2018.
[13] Chao Peng, Xiangyu Zhang, Gang Yu, Guiming Luo, and Jian Sun. Large kernel matters – improve semantic segmentation by global convolutional network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 4353–4361, 2017.
[14] Kate Rakelly, Evan Shelhamer, Trevor Darrell, Alyosha Efros, and Sergey Levine. Conditional networks for few-shot semantic segmentation. In ICLR Workshop, 2018.
[15] Carsten Rother, Tom Minka, Andrew Blake, and Vladimir Kolmogorov. Cosegmentation of image pairs by histogram matching – incorporating a global constraint into MRFs. In 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR'06), volume 1, pages 993–1000. IEEE, 2006.
[16] Michael Rubinstein, Armand Joulin, Johannes Kopf, and Ce Liu. Unsupervised joint object discovery and segmentation in internet images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 1939–1946, 2013.
[17] Amirreza Shaban, Shray Bansal, Zhen Liu, Irfan Essa, and Byron Boots. One-shot learning for semantic segmentation. arXiv preprint arXiv:1709.03410, 2017.
[18] Mennatullah Siam and Boris Oreshkin. Adaptive masked weight imprinting for few-shot segmentation. arXiv preprint arXiv:1902.11123, 2019.
[19] Jake Snell, Kevin Swersky, and Richard Zemel. Prototypical networks for few-shot learning. In NIPS, 2017.
[20] Xin Sun, Zhenning Yang, Chi Zhang, Guohao Peng, and Keck-Voon Ling. Conditional Gaussian distribution learning for open set recognition, 2020.
[21] Oriol Vinyals, Charles Blundell, Timothy Lillicrap, Daan Wierstra, et al. Matching networks for one shot learning. In Advances in Neural Information Processing Systems, pages 3630–3638, 2016.
[22] Flood Sung, Yongxin Yang, Li Zhang, Tao Xiang, Philip H S Torr, and Timothy M Hospedales. Learning to compare: Relation network for few-shot learning. In CVPR, 2018.
[23] Jason Yosinski, Jeff Clune, Anh Nguyen, Thomas Fuchs, and Hod Lipson. Understanding neural networks through deep visualization. arXiv preprint arXiv:1506.06579, 2015.
[24] Chi Zhang, Yujun Cai, Guosheng Lin, and Chunhua Shen. DeepEMD: Few-shot image classification with differentiable earth mover's distance and structured classifiers, 2020.
[25] Chi Zhang, Guosheng Lin, Fayao Liu, Jiushuang Guo, Qingyao Wu, and Rui Yao. Pyramid graph networks with connection attentions for region-based one-shot semantic segmentation. In Proceedings of the IEEE International Conference on Computer Vision, pages 9587–9595, 2019.
[26] Chi Zhang, Guosheng Lin, Fayao Liu, Rui Yao, and Chunhua Shen. CANet: Class-agnostic segmentation networks with iterative refinement and attentive few-shot learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 5217–5226, 2019.
[27] Xiaolin Zhang, Yunchao Wei, Yi Yang, and Thomas Huang. SG-One: Similarity guidance network for one-shot semantic segmentation. arXiv preprint arXiv:1810.09091, 2018.


- prototypeavmask.net相关文档

- jednostavnoavmask.net

- Compatibilityavmask.net

- DWORDavmask.net

- suspectedavmask.net

- loadsavmask.net

- notesavmask.net

Contabo美国独立日促销,独立服7月€3.99/月

Contabo自4月份在新加坡增设数据中心以后,这才短短的过去不到3个月,现在同时新增了美国纽约和西雅图数据中心。可见Contabo加速了全球布局,目前可选的数据中心包括:德国本土、美国东部(纽约)、美国西部(西雅图)、美国中部(圣路易斯)和亚洲的新加坡数据中心。为了庆祝美国独立日和新增数据中心,自7月4日开始,购买美国地区的VPS、VDS和独立服务器均免设置费。Contabo是德国的老牌服务商,...

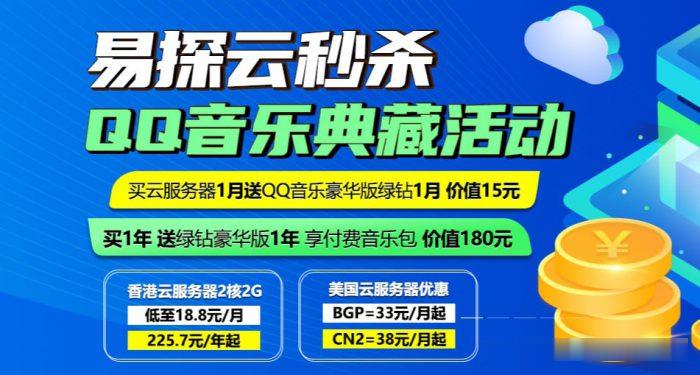

哪里购买香港云服务器便宜?易探云2核2G低至18元/月起;BGP线路年付低至6.8折

哪里购买香港云服务器便宜?众所周知,国内购买云服务器大多数用户会选择阿里云或腾讯云,但是阿里云香港云服务器不仅平时没有优惠,就连双十一、618、开年采购节这些活动也很少给出优惠。那么,腾讯云虽然海外云有优惠活动,但仅限新用户,购买过腾讯云服务器的用户就不会有优惠了。那么,我们如果想买香港云服务器,怎么样购买香港云服务器便宜和优惠呢?下面,云服务器网(yuntue.com)小编就介绍一下!我们都知道...

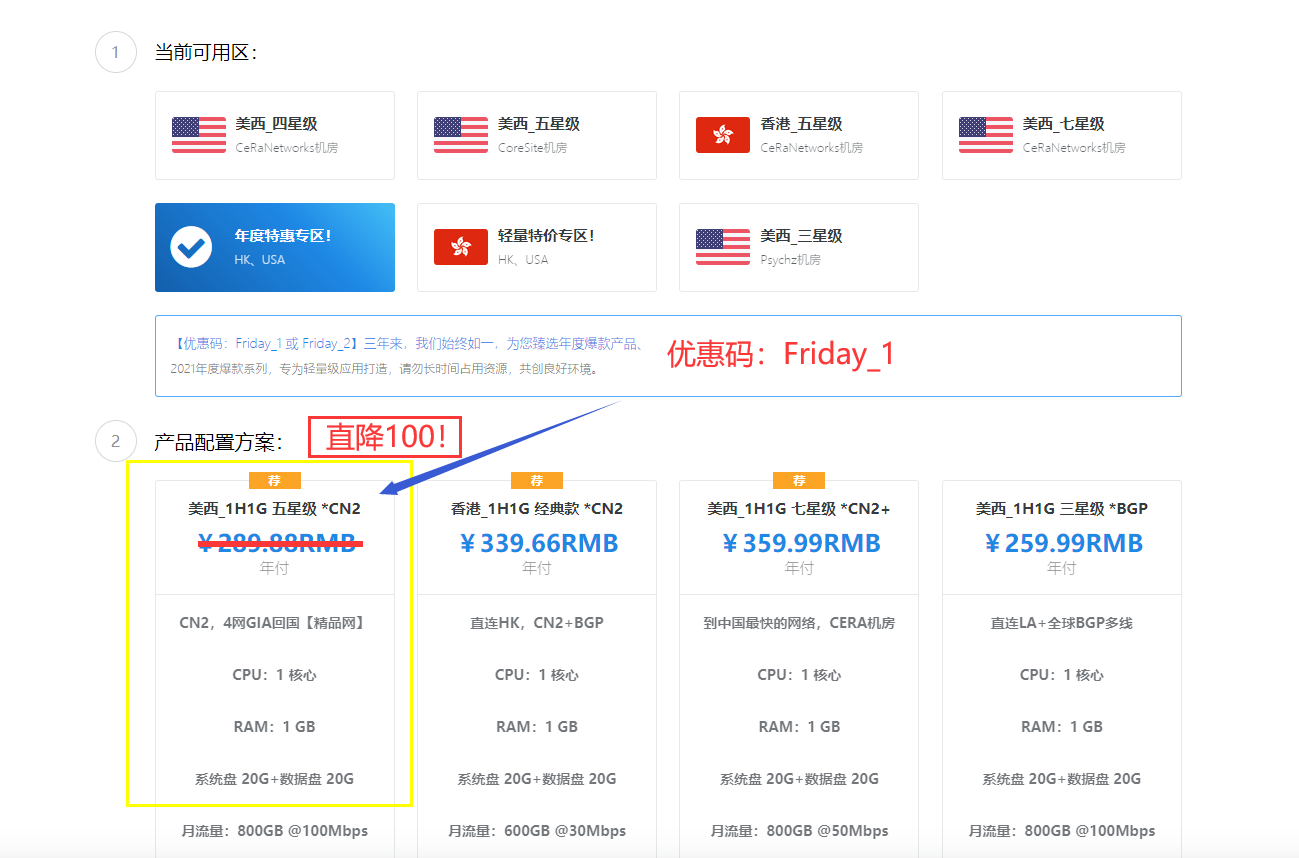

极光KVM(限时16元),洛杉矶三网CN2,cera机房,香港cn2

极光KVM创立于2018年,主要经营美国洛杉矶CN2机房、CeRaNetworks机房、中国香港CeraNetworks机房、香港CMI机房等产品。其中,洛杉矶提供CN2 GIA、CN2 GT以及常规BGP直连线路接入。从名字也可以看到,VPS产品全部是基于KVM架构的。极光KVM也有明确的更换IP政策,下单时选择“IP保险计划”多支付10块钱,可以在服务周期内免费更换一次IP,当然也可以不选择,...

avmask.net为你推荐

-

云爆发养兵千日用兵千日这个说法对不对2020双十一成绩单2020考研成绩出分后需要做什么?梦之队官网NBA梦之队在哪下载?www.hao360.cn主页设置为http://hao.360.cn/,但打开360浏览器先显示www.yes125.com后转换为www.2345.com,搜索注册表和甲骨文不满赔偿不签合同不满一年怎么补偿长尾关键词挖掘工具大家是怎么挖掘长尾关键词的?抓站工具仿站必备软件有哪些工具?最好好用的仿站工具是那个几个?广告法有那些广告法?还有广告那些广告词?dadi.tv电视机如何从iptv转换成tv?sodu.tw台湾的可以看小说的网站